API Governance | Nov 24, 2023 | 12 min read | By Stefan Đokić

Stefan Đokić is a Senior Software Engineer and tech content creator with a strong focus on the .NET ecosystem. He specializes in breaking down complex engineering concepts in C#, .NET, and software architecture into accessible, visually engaging content. Stefan combines hands-on development experience with a passion for teaching, helping developers enhance their skills through practical, focused learning.

In the constantly evolving realm of software development, ensuring top-tier performance, especially for .NET APIs, is not just beneficial but essential. Whether you're navigating the intricacies of the .NET Core framework or focusing on the responsiveness of your ASP.NET Core Web API, it's paramount to be equipped with effective strategies for optimization.

As C# developers, we understand the thirst for knowledge in this domain. This article serves as a comprehensive guide, combining insights from various performance enhancement methodologies to supercharge your .NET API's speed, efficiency, and overall user experience. We'll delve deep into various techniques, from memory management to database optimizations, ensuring your applications remain a cut above the rest.

Dive in as we unravel the secrets to maximizing the prowess of your .NET applications!

Embarking on the journey of optimization without a roadmap can lead to wasted efforts, misallocated resources, and even unintended complications. Just as a doctor wouldn't prescribe medication without a proper diagnosis, developers shouldn't jump into optimization without a clear understanding of where the issues lie.

Here's a step-by-step guide to making sure you're well prepared before you start optimizing your .NET API. These are the steps I use myself.

The first and foremost step is to know your starting point. What is the current performance of your API? Having a baseline allows you to measure improvements or regressions and gives you a clear point of reference.
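If you don't have a profiler or load-testing setup yet, even a crude in-process measurement gives you numbers to compare against later. The sketch below is a minimal illustration, assuming the code path you want to baseline can be wrapped in a delegate; `LatencyBaseline` is a hypothetical helper, not a library API, and for rigorous measurements a dedicated tool such as BenchmarkDotNet is a better fit.

```csharp
using System;
using System.Diagnostics;

// Hypothetical helper: runs an operation repeatedly and reports latency percentiles
// you can record as a baseline before optimizing and compare against afterwards.
public static class LatencyBaseline
{
    public static (double P50Ms, double P95Ms, double MaxMs) Measure(
        Action operation, int iterations = 200, int warmup = 10)
    {
        if (iterations <= 0) throw new ArgumentOutOfRangeException(nameof(iterations));

        // Warm-up runs let JIT compilation and caches settle so they don't skew the samples.
        for (int i = 0; i < warmup; i++) operation();

        var samples = new double[iterations];
        for (int i = 0; i < iterations; i++)
        {
            long start = Stopwatch.GetTimestamp();
            operation();
            long ticks = Stopwatch.GetTimestamp() - start;
            samples[i] = ticks * 1000.0 / Stopwatch.Frequency; // timestamp ticks -> milliseconds
        }

        Array.Sort(samples);
        return (Percentile(samples, 0.50), Percentile(samples, 0.95), samples[^1]);
    }

    // Nearest-rank percentile over an already sorted array.
    private static double Percentile(double[] sorted, double p)
    {
        int rank = (int)Math.Ceiling(p * sorted.Length); // 1-based rank
        return sorted[Math.Max(rank, 1) - 1];
    }
}
```

Recording percentiles rather than only an average matters because tail latency (p95 and above) can regress even when the average looks unchanged.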

Performance bottlenecks are specific areas or portions of your code that significantly degrade the overall performance. Pinpointing these areas ensures you target your optimization efforts where they can have the most impact.

Leveraging the right diagnostic tools can make a world of difference in your optimization journey. Here are some powerful tools that I use and that can help you:

Not all bottlenecks are created equal. Some might be causing minor delays, while others could be responsible for significant slowdowns. Once you've identified these bottlenecks, prioritize them based on their impact on the overall user experience. This ensures that you address the most critical issues first, yielding the most significant benefits in the shortest amount of time.
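One simple way to rank bottlenecks, assumed here rather than taken from the article, is total time spent per day: number of calls multiplied by mean latency. The `BottleneckSample` shape and `BottleneckPrioritizer` are hypothetical; in practice the numbers would come from your profiler or monitoring data.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// One row of profiling data for an endpoint or code path (hypothetical shape).
public record BottleneckSample(string Name, long CallsPerDay, double MeanLatencyMs);

public static class BottleneckPrioritizer
{
    // Ranks bottlenecks by total time spent per day (calls x mean latency),
    // so a moderately slow but very hot path can outrank a rare, very slow one.
    public static IReadOnlyList<(string Name, double TotalSecondsPerDay)> Rank(
        IEnumerable<BottleneckSample> samples) =>
        samples
            .Select(s => (s.Name, TotalSecondsPerDay: s.CallsPerDay * s.MeanLatencyMs / 1000.0))
            .OrderByDescending(x => x.TotalSecondsPerDay)
            .ToList();
}
```

Total time is only a proxy for user impact; a rarely called endpoint that takes seconds may still deserve attention if it sits on a critical user flow.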

Well, let me show you my optimization steps.

Using the most recent .NET version is pivotal for optimal application performance. Here's why:

Performance Boost: Newer versions come with refined and optimized runtime and libraries, leading to quicker code execution and better resource management.

Security: The latest versions encompass the most up-to-date security patches, minimizing vulnerabilities.

Bug Resolutions: Alongside security updates, newer versions rectify known issues, enhancing application stability.

Compatibility: Staying updated ensures your application is compatible with modern tools, libraries, and platforms.

Ensuring You Have the Latest Version:

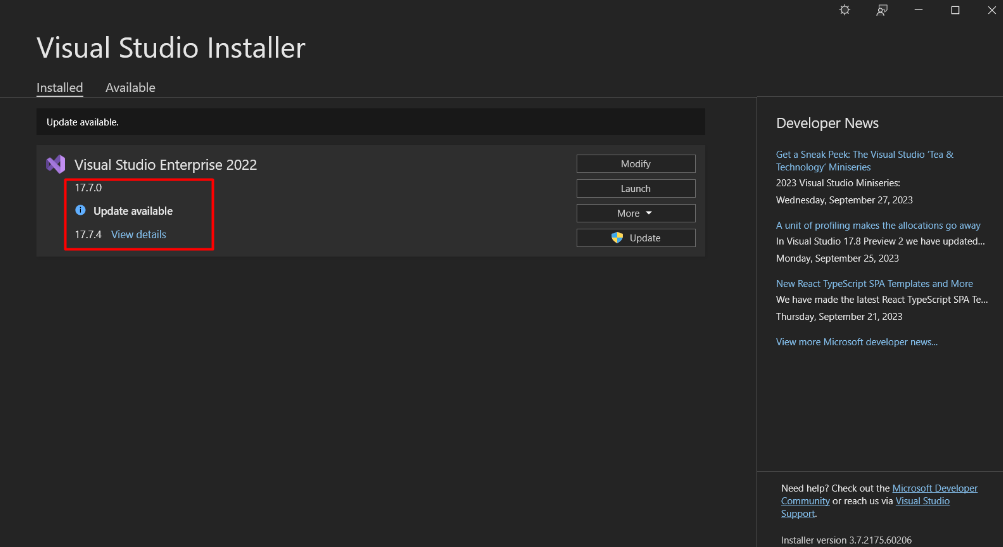

Update Visual Studio

Check the latest stable version here: https://dotnet.microsoft.com/en-us/download/dotnet/7.0
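Besides listing the installed SDKs and runtimes with `dotnet --list-sdks` and `dotnet --list-runtimes`, it can help to log which runtime a deployed API is actually running on. A small sketch using `RuntimeInformation.FrameworkDescription` and `Environment.Version` from the base class library; the `RuntimeCheck` wrapper is just an illustrative name.

```csharp
using System;
using System.Runtime.InteropServices;

public static class RuntimeCheck
{
    // Reports the runtime the process is actually running on, which can differ
    // from the newest SDK installed on the developer machine or build server.
    public static string Describe() =>
        $"{RuntimeInformation.FrameworkDescription} (Environment.Version: {Environment.Version})";
}
```

Logging this once at startup makes it easy to confirm that an upgrade actually reached production; the major version is governed by the `<TargetFramework>` element in your .csproj (for example `net8.0`).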

Harnessing asynchronous programming, particularly async/await in .NET, is a game-changer for developing responsive and scalable applications.

Here's a comprehensive look, complete with a practical example.

Responsiveness: In UI applications, async keeps the user interface snappy, irrespective of lengthy operations.

Scalability: For services like web APIs, async operations let the server handle more simultaneous requests, because threads are not blocked while waiting on I/O such as database queries. I will show you an example below.

Resource Efficiency: Instead of tying up threads, asynchronous operations use resources more judiciously, freeing up threads for other tasks.

Let me show you an example: Imagine you have a web service that fetches user data from a database.

If you do not use async/await:

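```csharp
public User FetchUserData(int userId)
{
    return Database.GetUser(userId); // Blocks the calling thread until the query completes
}
```

Why can this be a problem? If `Database.GetUser` takes a long time, the thread handling the call is blocked for the entire duration, holding up resources that could otherwise serve other requests.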

The solution is to introduce async/await. Let me show you how to do that:

```csharp
public async Task<User> FetchUserDataAsync(int userId)
{
    return await Database.GetUserAsync(userId); // Non-blocking: the thread is released while the query runs
}
```

Here, while the database call is in flight, `await` returns the thread to the thread pool so it can handle other work; when the query completes, the method resumes. This is what makes the application more responsive and scalable.

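Avoid Deadlocks: Be careful when mixing synchronous and asynchronous code, and avoid blocking on tasks with .Result or .Wait(). Where a synchronization context is present (for example, in UI apps or classic ASP.NET), this can deadlock, and even where it can't, it ties up a thread for the whole wait.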
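To see why this helps scalability, here is a self-contained sketch in which `Task.Delay` stands in for a database call; `FakeDatabase` and `UserLoader` are hypothetical names. One hundred awaited "queries" run concurrently and finish in roughly the time of one, without dedicating a blocked thread to each, and the caller stays async all the way instead of calling `.Result` or `.Wait()`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical stand-in for the article's Database class: Task.Delay simulates
// an I/O-bound query that does not occupy a thread while it waits.
public static class FakeDatabase
{
    public static async Task<string> GetUserAsync(int userId, CancellationToken ct = default)
    {
        await Task.Delay(100, ct); // simulated 100 ms round-trip
        return $"user-{userId}";
    }
}

public static class UserLoader
{
    // Async all the way: the tasks are awaited, never blocked on with .Result or .Wait().
    public static async Task<string[]> LoadManyAsync(IEnumerable<int> userIds, CancellationToken ct = default)
    {
        // Start every query, then await them together; while they are pending,
        // no thread pool thread is blocked waiting for them.
        var tasks = userIds.Select(id => FakeDatabase.GetUserAsync(id, ct));
        return await Task.WhenAll(tasks);
    }
}
```

Run sequentially, the same 100 calls would take about 10 seconds; awaited concurrently they complete in a little over 100 ms of wall-clock time.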

Caching has been one of my secret weapons in the realm of application performance, especially in scenarios that involve frequent data retrieval. Let me share a personal experience.

While building an e-commerce platform, I noticed that the site began to lag as the user base grew, especially on product pages. Whenever someone accessed a product, my system would dutifully fetch the details from the database. This repeated fetching started bogging down performance, elongating page load times, and leaving users visibly frustrated.

Upon analyzing, I observed a pattern: the exact product details were fetched multiple times for various users throughout the day.

A lightbulb moment occurred:

"What if I could temporarily store these details after the first retrieval, so the subsequent requests wouldn’t need to hammer the database?"

So, I created a caching strategy:

With caching, instead of always querying the database, the system first checks temporary storage (or cache) for product details. If the details are found in the cache, they're returned, bypassing the database entirely. If they aren't cached, they're retrieved from the database, presented to the user, and simultaneously stored in the cache for upcoming requests.
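The flow described above is the cache-aside pattern. To make each step visible, here is a minimal sketch using only the standard library; `Product`, `FakeProductDatabase`, and `CacheAsideProductService` are hypothetical names, and a `ConcurrentDictionary` with a simple time-to-live stands in for a real cache.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;

// Hypothetical types for illustration only.
public record Product(int Id, string Name);

public class FakeProductDatabase
{
    private int _hits;
    public int Hits => _hits;

    public Product GetProduct(int id)
    {
        Interlocked.Increment(ref _hits); // count round-trips so the effect of caching is visible
        return new Product(id, $"Product {id}");
    }
}

public class CacheAsideProductService
{
    private readonly ConcurrentDictionary<int, (Product Value, DateTime ExpiresAtUtc)> _cache = new();
    private readonly FakeProductDatabase _db;
    private readonly TimeSpan _ttl;

    public CacheAsideProductService(FakeProductDatabase db, TimeSpan ttl)
    {
        _db = db;
        _ttl = ttl;
    }

    public Product GetProductDetails(int productId)
    {
        // 1. Check the cache first; a fresh entry means the database is skipped entirely.
        if (_cache.TryGetValue(productId, out var entry) && entry.ExpiresAtUtc > DateTime.UtcNow)
            return entry.Value;

        // 2. Miss or expired entry: fetch from the database.
        var product = _db.GetProduct(productId);

        // 3. Store it so subsequent requests are served from memory until the TTL elapses.
        _cache[productId] = (product, DateTime.UtcNow + _ttl);
        return product;
    }
}
```

This toy version has no size limit and lets concurrent misses for the same key all reach the database; a production cache such as `IMemoryCache` handles expiration, eviction, and optional size limits for you.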

What did I use, and what can you use? I used .NET's built-in in-memory cache, `IMemoryCache`.

```csharp
// Program.cs: register the built-in in-memory cache
builder.Services.AddMemoryCache();
```

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

public class ProductService
{
    private readonly IMemoryCache _cache;

    public ProductService(IMemoryCache cache)
    {
        _cache = cache;
    }

    public Product GetProductDetails(int productId)
    {
        return _cache.GetOrCreate(productId, entry =>
        {
            // Sliding expiration: the entry is evicted after 10 minutes without being read
            entry.SlidingExpiration = TimeSpan.FromMinutes(10);
            return FetchProductFromDatabase(productId); // My previous database fetch
        });
    }
}
```

Noticeably Faster: My e-commerce site's response time improved drastically.

Reduced Database Stress: Fewer database hits meant much less load on the database and its infrastructure.

Happy Users: The user experience was substantially smoother, leading to positive feedback.

In the digital world, data can be vast and endless. Presenting or processing all of it at once can be both overwhelming for users and taxing on system resources.

Pagination, the technique of dividing content into separate pages, is an essential solution for this challenge. My encounter with the necessity of pagination is an illustrative tale of how a simple design decision can profoundly impact user experience and system efficiency.

In one of the applications I was building, there was a feature to display a list of all users. As the user base grew exponentially, this list became extensive. Every time someone tried to view the list, the system would fetch every single user from the database, causing considerable lag. Not only was the server strained, but the user interface also became cluttered and difficult to navigate.

The Power of Pagination:

Recognizing the issue, I decided to implement pagination. Here's how I approached it:

Instead of fetching all records, I modified the database query to retrieve a specific number of users at a time, say 20 or 50, based on the desired page size.

The front end was adapted to display this limited set of records and provide navigation controls (like "Next", "Previous", "First", and "Last") to cycle through pages.

The API was adjusted to accept parameters for the current page and the number of records per page, allowing dynamic retrieval based on user interaction.

Here's the code:

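```csharp
public List<User> GetPaginatedUsers(int pageNumber, int pageSize)
{
    return dbContext.Users
        .OrderBy(u => u.Id)                // Paging needs a stable order; without it, pages can overlap or skip rows
        .Skip((pageNumber - 1) * pageSize) // pageNumber is 1-based
        .Take(pageSize)
        .ToList();
}
```

So, what have I got here?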

With fewer records displayed at once, users could navigate and find the information they needed more comfortably.

Fetching limited records reduced the strain on the server and the database, ensuring faster response times.

Transferring fewer records meant reduced data transfer over the network, resulting in quicker load times and less bandwidth consumption.
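The "First" and "Last" navigation controls described earlier also need the total number of records, not just the current page. A minimal sketch of one way to return that, assuming a hypothetical `PagedResult<T>` response shape and an assumed maximum page size of 100; it works on any `IQueryable<T>`, so with EF Core you would pass something like `dbContext.Users` and `u => u.Id`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Linq.Expressions;

// Hypothetical response shape: TotalCount lets the client render "Last" and page numbers.
public record PagedResult<T>(IReadOnlyList<T> Items, int PageNumber, int PageSize, int TotalCount)
{
    public int TotalPages => (int)Math.Ceiling(TotalCount / (double)PageSize);
    public bool HasPrevious => PageNumber > 1;
    public bool HasNext => PageNumber < TotalPages;
}

public static class Pagination
{
    public const int MaxPageSize = 100; // assumed cap so a client can't request everything at once

    public static PagedResult<T> ToPage<T, TKey>(
        IQueryable<T> source, Expression<Func<T, TKey>> orderBy, int pageNumber, int pageSize)
    {
        // Clamp client-supplied values so they can't produce negative offsets or huge pages.
        pageNumber = Math.Max(pageNumber, 1);
        pageSize = Math.Clamp(pageSize, 1, MaxPageSize);

        int total = source.Count(); // first query: total number of records

        var items = source
            .OrderBy(orderBy)                  // stable order so pages don't overlap or skip rows
            .Skip((pageNumber - 1) * pageSize) // pageNumber is 1-based
            .Take(pageSize)
            .ToList();                         // second query: at most pageSize records

        return new PagedResult<T>(items, pageNumber, pageSize, total);
    }
}
```

This costs two queries per request (a count and the page itself). The ordering key should be unique, such as a primary key; otherwise rows with equal keys can shift between pages.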

During the development of an application, I implemented rigorous error handling. To ensure no critical issue went unnoticed, I liberally sprinkled exception handling across various layers of the application. The application ran smoothly, but as the load increased, I noticed a slight degradation in performance.

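Diagnostics revealed that a substantial number of exceptions were being thrown and caught, many of them for non-critical situations, such as looking up an item in a collection and throwing an exception if it wasn't present. While this approach made the application robust against unforeseen issues, it inadvertently added overhead: in .NET, throwing and catching an exception is far more expensive than an ordinary return, because the runtime has to capture a stack trace and unwind the call stack.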
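The pattern described above looks roughly like the hypothetical `ItemLookup` below, shown next to the exception-free alternative that `Dictionary<TKey, TValue>.TryGetValue` already provides. The class and exception names are illustrative only.

```csharp
#nullable enable
using System;
using System.Collections.Generic;

// Hypothetical exception used for an expected "not found" outcome.
public class ItemNotFoundException : Exception
{
    public ItemNotFoundException(int id) : base($"Item {id} not found.") { }
}

public class ItemLookup
{
    private readonly Dictionary<int, string> _items;

    public ItemLookup(Dictionary<int, string> items) => _items = items;

    // Exception-based: a missing item is an expected outcome, yet every miss pays
    // for creating, throwing, and catching an exception.
    public string FindOrThrow(int id) =>
        _items.TryGetValue(id, out var value) ? value : throw new ItemNotFoundException(id);

    public string? FindWithTryCatch(int id)
    {
        try { return FindOrThrow(id); }
        catch (ItemNotFoundException) { return null; }
    }

    // Exception-free: the miss is reported through the return value instead.
    public bool TryFind(int id, out string? value) => _items.TryGetValue(id, out value);
}
```

Both approaches return the same answers; the difference is cost. If you benchmark the miss path of each (for example with BenchmarkDotNet), the try/catch version is typically much slower per call, and that gap adds up when misses are common.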

What did I find as a solution?

A Result object encapsulates the outcome of an operation in a clean and expressive manner. It's designed to convey the result explicitly, without relying on exceptions. The beauty lies in its simplicity:

```csharp
public class Result<T>
{
    public bool IsSuccess { get; }
    public T Value { get; }
    public string Error { get; } // null when the operation succeeded

    protected Result(T value, bool isSuccess, string error)
    {
        IsSuccess = isSuccess;
        Value = value;
        Error = error;
    }

    public static Result<T> Success(T value) => new Result<T>(value, true, null);
    public static Result<T> Failure(string error) => new Result<T>(default(T), false, error);
}
```

Okay, so what can you do with this? Let me show you with a real-world example.

Traditional approach with exceptions:

```csharp
public User GetUser(int userId)
{
    if (!database.Contains(userId))
    {
        throw new UserNotFoundException();
    }

    return database.GetUser(userId);
}
```

With the Result paradigm:

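```csharp
public Result<User> GetUser(int userId)
{
    if (!database.Contains(userId))
    {
        return Result<User>.Failure("User not found.");
    }

    return Result<User>.Success(database.GetUser(userId));
}
```

So why use the Result object? It makes an expected failure, such as a missing user, an explicit part of the method's return type, and it avoids the overhead of throwing and catching exceptions for cases that are not truly exceptional.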
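The other half of the pattern is the caller, which branches on `IsSuccess` instead of wrapping the call in try/catch. A self-contained sketch: `Result<T>` is repeated from above (with nullable annotations) so the block compiles on its own, while `User`, `UserService`, and `UserEndpoint` are hypothetical stand-ins, with an in-memory dictionary in place of the database.

```csharp
#nullable enable
using System;
using System.Collections.Generic;

// The article's Result<T>, repeated so this sketch compiles on its own.
public class Result<T>
{
    public bool IsSuccess { get; }
    public T Value { get; }
    public string? Error { get; }

    protected Result(T value, bool isSuccess, string? error)
    {
        IsSuccess = isSuccess;
        Value = value;
        Error = error;
    }

    public static Result<T> Success(T value) => new Result<T>(value, true, null);
    public static Result<T> Failure(string error) => new Result<T>(default!, false, error);
}

// Hypothetical in-memory stand-in for the article's database.
public record User(int Id, string Name);

public class UserService
{
    private readonly Dictionary<int, User> _users = new() { [1] = new User(1, "Ada") };

    public Result<User> GetUser(int userId) =>
        _users.TryGetValue(userId, out var user)
            ? Result<User>.Success(user)
            : Result<User>.Failure("User not found.");
}

public static class UserEndpoint
{
    // The caller branches on IsSuccess; no exception is thrown for a missing user.
    public static string Describe(UserService service, int userId)
    {
        var result = service.GetUser(userId);
        return result.IsSuccess
            ? $"200 OK: {result.Value.Name}"
            : $"404 Not Found: {result.Error}";
    }
}
```

In an ASP.NET Core controller or minimal API, the two branches would typically map to a 200 response and a 404 response respectively.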

A Content Delivery Network (CDN) is a collection of servers distributed across various global locations. These servers store cached versions of your static and sometimes dynamic content. When a user makes a request, instead of accessing your primary server, they are routed to the nearest CDN server, resulting in significantly faster response times.

If you are using third-party libraries for CSS and JavaScript that have CDN versions available, then try using the CDN file path rather than the downloaded library file. A CDN can serve static assets like images, stylesheets, and JavaScript files from locations closer to the user, reducing the time needed for these assets to travel across the network.

Example:

```html
<!-- Include static assets from a CDN -->
<link rel="stylesheet" href="https://cdn.example.com/styles.css">
<script src="https://cdn.example.com/script.js"></script>
```

Optimizing API performance is essential in our digital world. Throughout our exploration of strategies, from the latest .NET versions to CDN integration, it's evident that an efficient API requires consistent monitoring and adaptive strategies. Tools and practices like caching, database optimization, and asynchronous programming showcase the evolution and depth of performance enhancement techniques.

However, the key takeaway is the continuous nature of this journey. Performance isn't a one-time achievement but an ongoing commitment. Regular updates, vigilant monitoring, and in-depth analysis are crucial. As user expectations grow and the tech landscape shifts, staying proactive and user-centric will ensure that APIs consistently deliver top-notch performance.
