By industry

By initiatives

By industry

By initiatives

API Design | Nov 12, 2025 | 14 min read | By Savan Kharod | Reviewed by Bhushan Gaikwad

Savan Kharod works on demand generation and content at Treblle, where he focuses on SEO, content strategy, and developer-focused marketing. With a background in engineering and a passion for digital marketing, he combines technical understanding with skills in paid advertising, email marketing, and CRM workflows to drive audience growth and engagement. He actively participates in industry webinars and community sessions to stay current with marketing trends and best practices.

Implementing protections like rate limiting and throttling is essential for maintaining the integrity and performance of your services. These strategies help ensure that your APIs remain reliable and available, even under heavy loads.

Rate limiting focuses on fairness, preventing any single user from monopolizing resources. This approach ensures that all users have equal access to your API, which is crucial for user satisfaction. On the other hand, throttling works behind the scenes, stabilizing your backend by controlling the flow of requests over time. It's less visible to users but vital for system resilience and longevity.

In this article, we will explore the key differences between rate limiting and throttling, and understand when it makes sense to implement one.

API rate limiting is a technique used to control the number of requests a user can make to an API within a specified time frame. This ensures that no single user can overwhelm the system, promoting fairness and stability across all users. By setting limits, you can prevent abuse and maintain optimal performance for everyone accessing the API.

This method is vital for protecting your resources and ensuring they are available when needed. Rate limiting enables developers to define thresholds, like allowing a specific number of requests per minute or hour. This not only enhances user experience but also helps in maintaining the overall health of the API system.

Rate limiting is ideal for scenarios where you need to ensure fairness among users. For example, if you're running a public API that serves a large number of developers, implementing rate limiting prevents any single user from hogging resources, keeping the experience smooth for everyone.

API throttling is a technique designed to control the flow of requests to an API over time. Unlike rate limiting, which restricts users to a maximum number of requests in a specific period, throttling smooths out the traffic by allowing requests at a steady rate. This helps prevent server overload and ensures that resources are used efficiently.

The key benefit of throttling is its ability to maintain system performance during peak usage times. Regulating how requests are handled allows the backend to manage load effectively, reducing the risk of crashes or slowdowns. This behind-the-scenes approach is crucial for preserving a seamless user experience, especially during high-demand periods like product launches or events

Throttling shines in scenarios that require backend stability. Think of high-traffic events, such as flash sales or product launches, where managing the flow of requests helps maintain performance and prevents server overload.

Rate limiting and throttling are two essential mechanisms that serve distinct purposes in API management. Here’s a strategic breakdown of the key difference between Rate Limiting vs Throttling:

| Aspect | Rate Limiting | Throttling |

|---|---|---|

| Primary intent | Enforce a hard cap on how many requests a client (token/user/IP) may make over a time window to preserve fairness and prevent abuse. | Shape traffic to a target rate so the system stays stable during spikes—by delaying, shedding, or otherwise pacing requests. |

| What happens when exceeded | Excess requests are typically rejected with HTTP 429 Too Many Requests; responses often include Retry-After to tell the client when to try again. | Excess load is slowed, queued, or dropped. Servers may return 429 (asking the client to slow down) or 503 Service Unavailable during overload/load-shedding. |

| Typical scope | Per API key/user/IP, per route, or global. Public APIs often expose per-endpoint or per-API limits and tell clients their remaining budget via headers. | Often system-level (protect shared resources or backends), but can be applied per client or route to smooth bursts. Providers may implement throttling behind the scenes at gateways/CDNs. |

| Common algorithms | Token Bucket (allows bursts), Sliding Window (log/counter), Fixed Window. | Frequently Leaky Bucket-style pacing or queue-based shaping; can pair with load-shedding when queues grow. |

| Burst behavior | Depends on algorithm: token bucket permits short bursts until tokens deplete; fixed windows can allow boundary bursts. | Intentionally smooths bursts (steady outflow) or clips them via shedding when necessary. |

| Client experience | Immediate errors after the budget is exhausted; the client should back off per Retry-After/reset time. Many APIs expose X-RateLimit-*/RateLimit-* headers. | Latency may increase; some calls fail fast with 429/503 during overload. Clients should implement exponential backoff + jitter and idempotent retries. |

| Typical headers | Standardizing: RateLimit-Policy, RateLimit (IETF draft). De-facto: X-RateLimit-Limit, X-RateLimit-Remaining, X-RateLimit-Reset; Retry-After. | Same headers may be present when a throttle triggers; 503 overloads often omit per-client budgets but may still include Retry-After. |

| Status codes you’ll see | 429 with Retry-After; sometimes provider-specific headers indicating remaining budget/time to reset. | 429 (ask the client to slow down) or 503 (temporary overload/load shedding). |

| Where enforced | CDN/WAF, API gateway, service layer. Example: Cloudflare rate-limiting rules; many APIs (GitHub/Stripe) expose limits and remaining budget. | Same layers as rate limiting, plus autoscaling/load-shedding controllers in the platform. |

| Config style | “100 requests/min per token,” “1,000/day per org,” etc. Window types: fixed, sliding, and token budget. | “Sustain 200 rps, burst 400; drop or queue overflow.” Cloud vendors often expose steady‐state rate + burst knobs. |

| Provider terminology | Many docs treat rate limiting as quotas/budgets. | Some providers use “throttling” to mean rate limiting; e.g., AWS API Gateway exposes rate and burst under “throttling.” |

Idea: Count requests per key (API key/user/IP/route) within a discrete window (e.g., 1 minute).

How it works:

Compute the current window key (e.g., user123:window:2025-11-07T18:30:00Z).

Atomically increment the counter on each request; set TTL to the window length when first created.

If counter > limit → return 429 and include Retry-After for the next reset.

(Optional) Reduce boundary bursts by layering multiple windows (minute + hour).

Where to enforce: Reverse proxy/gateway/service. Azure APIM: rate-limit / rate-limit-by-key.

Idea: Enforce a limit over the last N seconds/minutes with less burstiness than fixed windows.

Two common forms:

Sliding window log: store timestamps (e.g., Redis Sorted Set); on each request, evict old entries < now - window, then check size. Precise but higher memory.

Sliding window counter: keep counts for the current and previous sub-windows; weight the previous window proportionally to time elapsed (approximate, low memory).

Where to enforce: Gateway/service with shared store (Redis) for consistency across instances.

Idea: Tokens refill at a rate r; the bucket size b defines the maximum burst. A request consumes tokens; if none are left, reject or delay.

How it works:

Compute tokens = min(b, tokens + r * dt) on each check; if tokens >= cost, allow and decrement, else block/slowWhere to enforce:

Envoy/Istio: local rate-limit HTTP filter uses a token bucket per route/host.

AWS API Gateway: exposes rate (steady RPS) + burst (bucket size) as “throttling.

Idea: When backends are saturated, enqueue requests and drain at a safe rate; drop if the queue is full or wait exceeds a timeout.

How it works:

Define max concurrency to the upstream and a bounded queue with a timeout.

If the queue is full or a timeout exceeded → fail fast (usually 429 or 502/503, depending on layer).

NGINX upstream can queue excess connections/requests with a max length + timeout.

Azure APIM offers limit-concurrency (cap in-flight); when exceeded it returns 429 immediately (no built-in queue).

Idea: Multiple queues by priority/tier (e.g., “Gold/Silver/Bronze”) with fair queuing; drain high-priority first, protect low-priority from starvation.

How it works:

Classify requests (header/key/plan) → map to a priority level.

Maintain per-priority queues; choose the next request using weighted/fair queuing.

Kubernetes API Priority and Fairness (APF) is a good reference: priority levels + fair queuing to protect critical traffic.

Idea: Smooth spikes so downstream sees a near-constant rate.

How it works:

Leaky bucket: queue + constant drain rate (classical traffic shaper). Used by NGINX limit_req (effectively leaky-bucket).

Token bucket at the edge/gateway can also smooth while allowing bounded bursts (see above). Envoy/Istio and AWS use token-bucket semantics.

Done right, API rate limiting and API throttling can protect performance without punishing legitimate clients. Here are some of the best practices to do it right:

Document who gets how much, and where. Combine plan-level limits (per token/user/IP) with endpoint-level rules (e.g., tighter caps on /login or /search). On managed gateways, this is straightforward: Azure API Management exposes rate-limit and rate-limit-by-key policies for coarse and per-key caps that return 429 Too Many Requests when exceeded.

For broader fairness and abuse resistance, prefer multiple windows (per-minute + per-hour) or sliding windows (smoother enforcement). Kong’s Rate Limiting Advanced supports multiple window sizes and sliding windows out of the box.

When a client exceeds a limit, respond with HTTP 429 and tell them when to try again using Retry-After; both behaviors are standardized (429 in RFC 6585, Retry-After in RFC 9110).

Expose budgets so well-behaved clients can self-throttle. Many providers send X-RateLimit-*/Retry-After; an IETF draft defines RateLimit and RateLimit-Policy header fields to standardize this.

For simple, documented caps, fixed or sliding windows are easy to reason about (sliding avoids boundary bursts). Kong implements both.

For bursty-but-bounded traffic, use a token bucket: allow short bursts, refill at a steady rate. Envoy/Istio’s local rate-limit filter is token-bucket-based, and AWS API Gateway exposes rate (steady RPS) and burst (bucket size) using the same model.

To throttle spikes into a smooth outflow at the edge, use leaky-bucket pacing. NGINX limit_req implements a leaky bucket with optional burst/nodelay controls.

Throttling isn’t about blocking everything; it’s about staying within safe concurrency and absorbing bursts:

Cap in-flight requests with limit-concurrency in Azure APIM; excess requests fail fast with 429 (no unbounded pile-ups).

At the proxy, add bounded queues where appropriate; NGINX can queue and pace requests while backends catch up. If the system is truly overloaded, respond 503 Service Unavailable, optionally with Retry-After per RFC 9110, to advertise temporary overload.

Protect your platform by making the “right thing” easy for consumers:

Honor headers: when X-RateLimit-Remaining hits 0 or Retry-After is present, wait—this is exactly how GitHub instructs clients to behave.

Retry responsibly: use exponential backoff with jitter to avoid thundering herds; AWS documents this pattern and many SDKs implement it.

Make retries safe: support idempotency keys for POST/PATCH so clients can retry without duplicating work (Stripe’s Idempotency-Key).

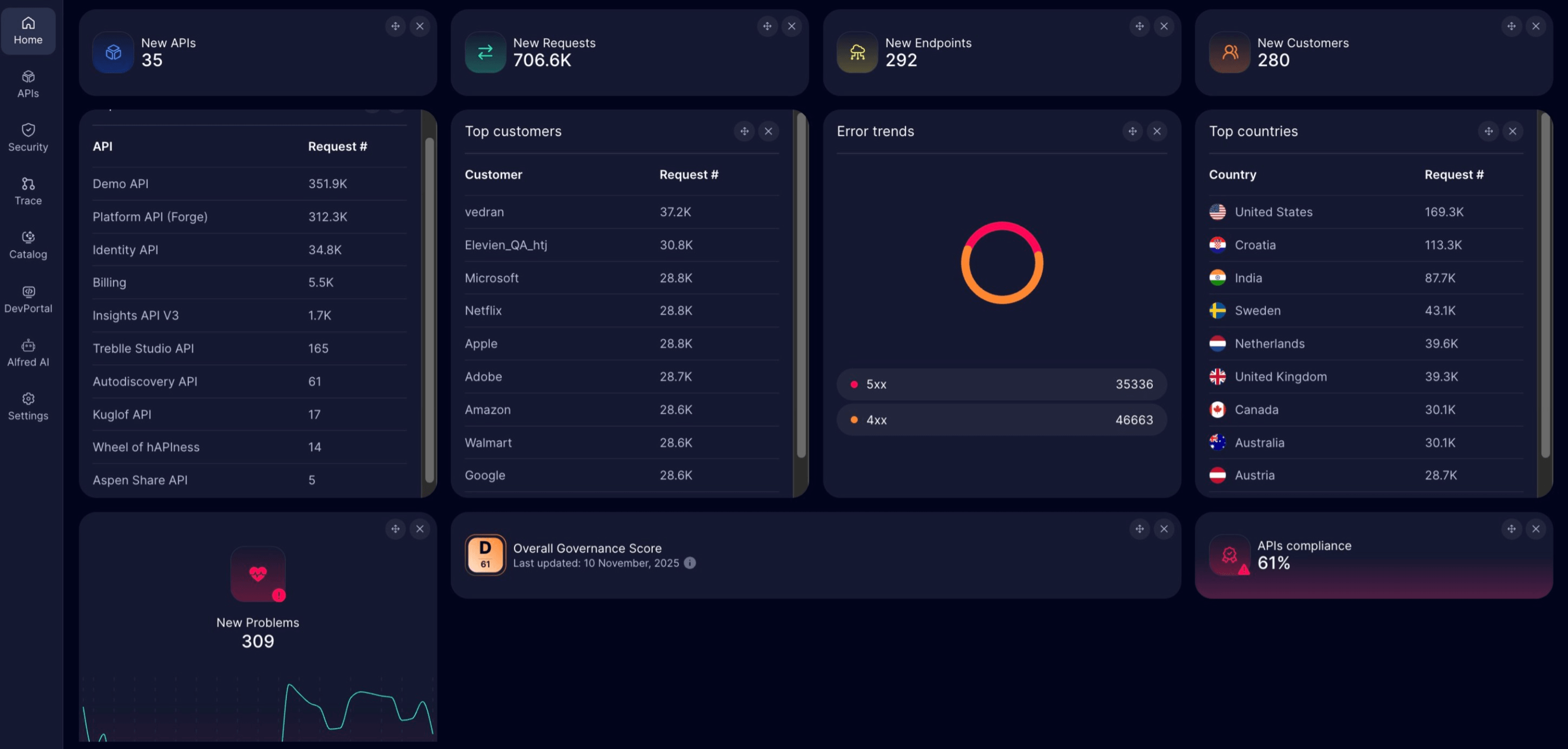

You can’t tune what you don’t observe. Track RPS per consumer/route, 429/503 rates, queue delay, and tail latency. An observability layer like Treblle collects rich request-level data in real time and surfaces spikes, headers, and anomalies, useful for correlating rate-limit events with consumer behavior.

Treblle also includes governance and security features that look for rate-limit headers and potential abuse signals, helping you spot evasion (rotating IPs/keys) and tighten counter keys (token + user + IP) where appropriate.

Need real-time insight into how your APIs are used and performing?

Treblle helps you monitor, debug, and optimize every API request.

Explore Treblle

Need real-time insight into how your APIs are used and performing?

Treblle helps you monitor, debug, and optimize every API request.

Explore Treblle

Not all requests are equal. Use priority tiers and fair-queuing so critical operations (e.g., webhooks, auth) keep moving during spikes. Kubernetes’ API Priority and Fairness (APF) is a concrete reference: it assigns requests to priority levels and uses fair queues to prevent starvation under load, a pattern you can emulate at your gateway.

Stage spike tests to confirm clients see 429 with a meaningful Retry-After, throttling keeps p95/p99 within SLOs, and queues never grow unbounded. Validate edge pacing (e.g., NGINX leaky-bucket) and gateway token buckets (AWS/Envoy) behave as intended for each route.

When deciding between rate limiting and throttling, it’s essential to consider your API's specific needs. Rate limiting is best suited for scenarios where fairness is paramount, ensuring that no single user can dominate the resource pool. This approach is particularly effective for public APIs where diverse users interact, promoting a smooth experience for all.

On the other hand, throttling excels in managing backend stability, especially during peak usage times. By controlling the flow of requests, it prevents server overload and maintains performance during high-demand events.

Understanding these differences can help you implement the right strategy that not only protects your API but also enhances the overall user experience.

Rate limiting for subscription-based APIs is crucial for managing user access and ensuring that services remain fair and efficient. By setting specific request limits based on user subscription tiers, you can control resource usage effectively.

This means that higher-paying users might enjoy more generous limits, while free-tier users have restricted access.

This structured approach not only enhances user satisfaction but also ensures that your systems can handle varying loads. With real-time analytics from Treblle, you can monitor user behavior and adjust these limits as needed, ensuring a smooth experience for all subscribers.

Throttling becomes especially critical for high-traffic and real-time systems. When user demand surges, like during flash sales or major product launches, a sudden influx of requests can overwhelm your API. Throttling helps manage this by controlling the rate of incoming requests, ensuring that the backend remains responsive without crashing.

By smoothing out the request flow, throttling enhances the user experience while protecting system integrity. It enables your APIs to handle spikes efficiently, allowing users to enjoy seamless interactions even under heavy load.

With Treblle, you can monitor these high-traffic periods in real-time, optimizing your throttling strategies to keep everything running smoothly.

Treblle’s API Intelligence Platform is your go-to solution for seamlessly managing rate limiting and throttling. With real-time analytics, you can monitor API requests and user behavior effortlessly, allowing you to set the right thresholds that enhance performance while ensuring fairness.

By integrating Treblle, you can effortlessly adjust your rate limits and throttling strategies based on real-time data. This not only aids in preventing abuse but also helps maintain a smooth user experience, even during high-demand periods.

With actionable insights at your fingertips, you can make informed decisions that keep your APIs robust and reliable, adapting to user needs as they evolve

Implementing governance and discoverability in your API management strategy is essential for maintaining a well-organized infrastructure.

By establishing clear guidelines and policies, you can ensure that your APIs are not only secure but also easy to navigate. This promotes better collaboration among development teams and enhances the overall user experience.

Bring policy enforcement and control to every stage of your API lifecycle.

Treblle helps you govern and secure your APIs from development to production.

Explore Treblle

Bring policy enforcement and control to every stage of your API lifecycle.

Treblle helps you govern and secure your APIs from development to production.

Explore Treblle

With Treblle, you gain tools that facilitate real-time monitoring and detailed logging, making it easier to keep track of API usage and performance. This level of transparency enables teams to pinpoint areas for improvement and make informed decisions regarding governance.

Discoverability features also enable users to find and access APIs more efficiently, promoting better integration and utilization across projects.

Understanding the differences between API rate limiting and throttling is essential for building resilient and user-friendly services.

While rate limiting ensures fair access for all users, throttling manages the flow of requests to prevent server overload. Together, these strategies create a balanced environment that enhances performance and user satisfaction.

By implementing both techniques, you can safeguard your API from abuse while maintaining a seamless user experience. With Treblle’s real-time analytics, you can easily monitor and adjust these strategies to adapt to changing demands, ensuring that your APIs remain robust and reliable in any scenario.

This proactive approach not only strengthens your API's defenses but also fosters trust and loyalty among your users.

API Design

API DesignDiscover the 13 best OpenAPI documentation tools for 2026. Compare top platforms like Treblle, Swagger, and Redocly to improve your DX, eliminate documentation drift, and automate your API reference.

API Design

API DesignAPI authorization defines what an authenticated user or client can do inside your system. This guide explains authorization vs authentication, breaks down RBAC, ABAC, and OAuth scopes, and shows how to implement simple, reliable access control in REST APIs without cluttering your codebase.

API Design

API DesignUnmanaged API growth produces shadow endpoints you can’t secure or support. This guide explains how sprawl creates blind spots, the security and compliance risks, and a practical plan to stop it at the source.