API Design | May 9, 2025 | 10 min read | By Savan Kharod

Savan Kharod works on demand generation and content at Treblle, where he focuses on SEO, content strategy, and developer-focused marketing. With a background in engineering and a passion for digital marketing, he combines technical understanding with skills in paid advertising, email marketing, and CRM workflows to drive audience growth and engagement. He actively participates in industry webinars and community sessions to stay current with marketing trends and best practices.

As AI applications become increasingly sophisticated, developers are exploring architectural paradigms that can efficiently support complex, context-aware interactions. Two prominent approaches have emerged: the Model Context Protocol (MCP) and Serverless APIs.

The serverless computing market is growing quickly: it was valued at $24.51 billion in 2024 and is projected to reach $52.13 billion by 2030, growing at a compound annual growth rate (CAGR) of 14.1%. This growth is driven by enterprise digital transformation initiatives and wider adoption of cloud technologies.

Meanwhile, the Model Context Protocol (MCP), introduced by Anthropic in late 2024, is gaining traction among AI developers as a standard way to connect AI models with everyday apps and data sources. Early adopters such as Block and Apollo have integrated MCP into their systems to extend what their platforms can do.

In this article, we examine the distinctions, use cases, and potential synergies between the Model Context Protocol (MCP) and Serverless APIs in the context of AI applications.

💡

Building AI-ready APIs? Treblle gives you real-time insights, analytics, and documentation to understand and improve every API call—whether you’re using MCP, Serverless, or both.

The Model Context Protocol (MCP) is an open standard developed by Anthropic to streamline the integration of AI models with external data sources and tools. Think of MCP as a universal adapter, akin to a USB-C port, that allows AI applications to seamlessly connect with various systems without the need for custom integrations.

MCP uses a client-server architecture. An AI application (the host) runs MCP clients, each connected to an MCP server that exposes specific capabilities, such as access to databases, file systems, or APIs, in the form of tools, resources, and prompts. This standardized approach simplifies building context-aware AI applications, letting them retrieve and use real-time information.
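The sketch below is a simplified, in-process illustration of that exchange. MCP messages are JSON-RPC 2.0, and two of the methods a server answers are `tools/list` (advertise tools and their input schemas) and `tools/call` (run one). The `lookup_order` tool, its data, and the `handle_message` and `_error` helpers are hypothetical; the sketch skips the `initialize` handshake, the stdio or HTTP transport, and the schema validation that a real server built with an official MCP SDK would handle.

```python
import json
from typing import Any

# Hypothetical tool: a real server might query a database or internal API here.
def lookup_order(arguments: dict[str, Any]) -> str:
    orders = {"A-100": "shipped", "A-101": "processing"}  # stand-in data
    return orders.get(arguments.get("order_id", ""), "unknown order")

# Tools this toy server exposes; inputSchema is a JSON Schema for the arguments.
TOOLS: dict[str, dict[str, Any]] = {
    "lookup_order": {
        "description": "Return the status of an order by ID.",
        "inputSchema": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
        "handler": lookup_order,
    },
}

def _error(request: dict[str, Any], code: int, message: str) -> str:
    """Serialize a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": request.get("id"),
                       "error": {"code": code, "message": message}})

def handle_message(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request and return the serialized response."""
    request = json.loads(raw)
    method = request.get("method")
    if method == "tools/list":
        # Advertise each tool's name, description, and input schema (never the handler).
        result: dict[str, Any] = {
            "tools": [
                {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
                for name, t in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        params = request.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            # -32602 is JSON-RPC's "Invalid params" code.
            return _error(request, -32602, f"Unknown tool: {params.get('name')}")
        text = tool["handler"](params.get("arguments", {}))
        # Tool results come back as a list of typed content items.
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        # -32601 is JSON-RPC's "Method not found" code.
        return _error(request, -32601, f"Method not found: {method}")
    return json.dumps({"jsonrpc": "2.0", "id": request.get("id"), "result": result})
```

A client would first call `tools/list` to discover `lookup_order`, then send a `tools/call` request with `{"name": "lookup_order", "arguments": {"order_id": "A-100"}}` and hand the returned text content to the model.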

Serverless APIs are application programming interfaces that run on serverless computing platforms, such as AWS Lambda, Azure Functions, or Google Cloud Functions. In this model, developers can deploy code that automatically scales and executes in response to events, eliminating the need to manage the underlying server infrastructure.

Serverless APIs are particularly well-suited for event-driven applications, offering benefits like automatic scaling, reduced operational overhead, and cost efficiency. They enable developers to focus on writing code that responds to specific triggers, such as HTTP requests or database changes, while the cloud provider handles provisioning and scaling of resources.
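For illustration, here is a minimal sketch of a serverless HTTP endpoint in Python, written in the `handler(event, context)` shape AWS Lambda uses and returning the `statusCode`/`headers`/`body` dictionary that an API Gateway proxy integration expects. The request field `text` and the word-count logic are placeholders, and the sketch assumes an unencoded JSON body (it does not handle `isBase64Encoded`).

```python
import json
from typing import Any

def _response(status: int, payload: dict[str, Any]) -> dict[str, Any]:
    # API Gateway proxy integrations expect statusCode, headers, and a string body.
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }

def handler(event: dict[str, Any], context: Any) -> dict[str, Any]:
    """Entry point the platform invokes once per HTTP request event."""
    try:
        body = json.loads(event.get("body") or "{}")
    except json.JSONDecodeError:
        return _response(400, {"error": "body must be valid JSON"})

    text = body.get("text")
    if not isinstance(text, str) or not text.strip():
        return _response(400, {"error": "'text' is required"})

    # Placeholder work: a real function might call a model or another service here.
    words = text.split()
    return _response(200, {"word_count": len(words), "preview": " ".join(words[:5])})
```

Because the function only maps an event to a response, it can be exercised locally, for example with `handler({"body": json.dumps({"text": "hello serverless world"})}, None)`, while provisioning and scaling stay with the platform.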

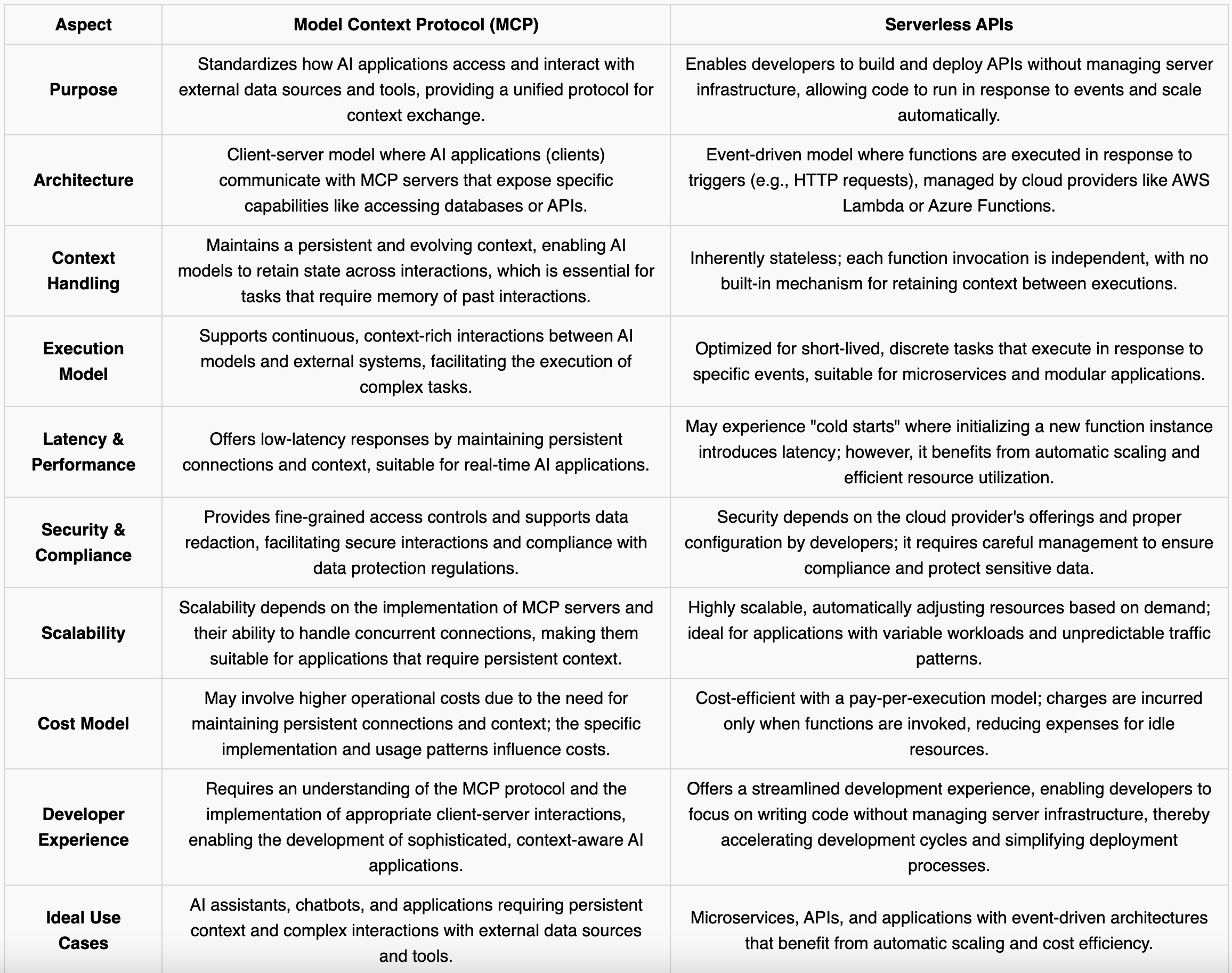

When comparing MCP and Serverless APIs, it helps to understand each one's strengths and the part of an AI application it serves. MCP excels at providing structured, evolving context to AI models, as further outlined in this comparison with traditional APIs, while Serverless APIs offer scalable, event-driven execution that suits asynchronous tasks.

The Model Context Protocol (MCP) is designed to supply AI models with structured, relevant, and evolving context, which is crucial for real-time, secure, and performant AI behavior. Here are some scenarios where MCP shines:

Serverless APIs run on infrastructure that auto-scales and abstracts away server management, making them ideal for event-driven or bursty workloads. They are especially valuable in the following AI application scenarios, notably when combined with the best machine learning APIs for data preprocessing and orchestration:

For AI applications, the choice between MCP and Serverless APIs depends on several factors: performance, scalability, security, developer experience, and cost. Weighing these helps you pick the right architecture, or a combination of both, for your application's requirements.

Integrating the Model Context Protocol (MCP) with Serverless APIs is a powerful way to build scalable, context-aware, and efficient AI systems, especially when paired with the best AI APIs for your use case.

While MCP provides structured and evolving context to AI models, Serverless APIs offer event-driven, scalable execution. Combining these two can lead to robust AI solutions that leverage the strengths of both architectures.

Serverless functions can be employed to handle events that necessitate context updates in AI applications. For instance, when a new document is uploaded to a storage system, a serverless function can process this event and update the relevant context in the MCP server, ensuring that the AI model has access to the most recent information. This event-driven approach ensures that context remains current without manual intervention.
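A minimal sketch of that flow, assuming uploads land in Amazon S3 and trigger a Lambda-style function: the function reads the bucket and key from each `ObjectCreated` record in the S3 event notification and writes them to a store the MCP server reads when serving context. `ContextStore`, `on_document_uploaded`, and `read_context` are hypothetical names, and the in-memory store stands in for a shared database.

```python
from typing import Any, Optional
from urllib.parse import unquote_plus

class ContextStore:
    """Hypothetical shared store that the MCP server reads from when serving context."""

    def __init__(self) -> None:
        self.documents: dict[str, dict[str, Any]] = {}

    def upsert(self, uri: str, metadata: dict[str, Any]) -> None:
        self.documents[uri] = metadata

STORE = ContextStore()

def on_document_uploaded(event: dict[str, Any], context: Any) -> int:
    """Handle an S3 event notification; returns the number of documents indexed."""
    indexed = 0
    for record in event.get("Records", []):
        if not record.get("eventName", "").startswith("ObjectCreated"):
            continue  # ignore deletions and other event types
        s3 = record["s3"]
        bucket = s3["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 notifications (spaces become '+').
        key = unquote_plus(s3["object"]["key"])
        STORE.upsert(f"s3://{bucket}/{key}",
                     {"bucket": bucket, "key": key, "size": s3["object"].get("size")})
        indexed += 1
    return indexed

def read_context(uri: str) -> Optional[dict[str, Any]]:
    """What a hypothetical MCP resource handler might call to return fresh context."""
    return STORE.documents.get(uri)
```

Keeping the store as the boundary means the MCP server does not need to know which event source updated it; another trigger, such as a database change, could write to the same store.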

MCP excels at maintaining real-time, context-rich interactions, but some tasks, such as data preprocessing or long-running computations, are better suited to asynchronous execution. Serverless functions can handle these tasks independently and update the MCP server when they finish. This separation of concerns keeps AI applications responsive while offloading resource-intensive operations to serverless functions.
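One way to sketch this split is a job pattern: a hypothetical MCP tool enqueues the work and returns a job ID at once, a serverless worker processes the queued message, and a second tool lets the model poll for the result. The in-memory `queue.Queue` and dictionary stand in for a managed queue and results table, and every function name here is an illustrative assumption.

```python
import queue
import uuid
from typing import Any

# Stand-ins for managed services: a message queue and a results table.
JOB_QUEUE: queue.Queue = queue.Queue()
JOB_RESULTS: dict[str, dict[str, Any]] = {}

def start_preprocessing(records: list[str]) -> str:
    """Hypothetical MCP tool: enqueue the work and return a job ID immediately."""
    job_id = str(uuid.uuid4())
    JOB_RESULTS[job_id] = {"status": "pending"}
    JOB_QUEUE.put({"job_id": job_id, "records": records})
    return job_id

def preprocessing_worker(message: dict[str, Any]) -> None:
    """Hypothetical serverless worker, invoked once per queued message."""
    # Placeholder for long-running work: trim, lowercase, drop blanks, deduplicate.
    cleaned = sorted({r.strip().lower() for r in message["records"] if r.strip()})
    JOB_RESULTS[message["job_id"]] = {"status": "done", "clean_records": cleaned}

def get_job_status(job_id: str) -> dict[str, Any]:
    """Hypothetical MCP tool the model can call later to pick up the result."""
    return JOB_RESULTS.get(job_id, {"status": "unknown"})

def deliver_pending_messages() -> None:
    """Local simulation of the platform delivering queued messages to the worker."""
    while not JOB_QUEUE.empty():
        preprocessing_worker(JOB_QUEUE.get())
```

Because `start_preprocessing` returns before any processing happens, the MCP interaction stays responsive; in a managed setup the worker could scale with queue depth independently of the MCP server.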

Integrating external services, such as third-party APIs or databases, can be efficiently managed using serverless functions. These functions can act as intermediaries, fetching data from external sources and formatting it appropriately before updating the MCP server. This approach simplifies the integration process and ensures that AI models have access to a wide range of external data sources.
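A minimal sketch of such an intermediary, assuming a hypothetical third-party ticketing API that returns `{"items": [...]}` with `ticket_id`, `subject`, `state`, and `labels` fields. The function fetches the payload, maps it onto a compact shape, and returns the records that would then be written to the MCP server's store. The fetcher is injectable so the normalization can be tested without network access; the `/tickets` path, field names, and function names are placeholders.

```python
import json
import urllib.request
from typing import Any, Callable

Fetcher = Callable[[str], dict[str, Any]]

def http_get_json(url: str) -> dict[str, Any]:
    """Default fetcher using the standard library; production code would add retries and auth."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)

def normalize_ticket(raw: dict[str, Any]) -> dict[str, Any]:
    """Map a hypothetical third-party ticket payload onto the compact shape the MCP side stores."""
    return {
        "id": str(raw["ticket_id"]),
        "title": raw.get("subject", "").strip(),
        "status": raw.get("state", "unknown").lower(),
        "tags": sorted(set(raw.get("labels", []))),
    }

def sync_tickets(base_url: str, fetch: Fetcher = http_get_json) -> list[dict[str, Any]]:
    """Serverless-style intermediary: fetch external data and normalize it for the MCP server."""
    payload = fetch(f"{base_url}/tickets")
    # A real function would now write these records to the store the MCP server reads from.
    return [normalize_ticket(item) for item in payload.get("items", [])]
```

Keeping the vendor-specific field mapping in `normalize_ticket` means a change in the external API touches only the serverless function, not the MCP server or the model's view of the data.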

Combining MCP with Serverless APIs promotes a modular architecture where each component has a well-defined role. MCP focuses on maintaining context and facilitating AI interactions, while serverless functions handle discrete tasks and integrations. This separation enhances maintainability, as updates or changes to one component have minimal impact on others.

Serverless architectures offer cost-effective scaling by charging only for actual usage. By offloading specific tasks from MCP to serverless functions, organizations can optimize resource utilization and reduce operational costs. This combination ensures that AI applications can scale efficiently without incurring unnecessary expenses.
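The pay-per-use model is easy to reason about with a back-of-the-envelope estimate: a per-request fee plus compute billed in GB-seconds (allocated memory times duration). The default rates below are assumptions modeled on AWS Lambda's published x86 on-demand pricing and ignore any free tier or volume discounts; check your provider's current pricing before relying on them.

```python
def monthly_serverless_cost(
    invocations: int,
    avg_duration_ms: float,
    memory_mb: int,
    price_per_million_requests: float = 0.20,   # assumed rate; verify current pricing
    price_per_gb_second: float = 0.0000166667,  # assumed rate; verify current pricing
) -> float:
    """Estimate pay-per-use cost: a per-request fee plus compute billed in GB-seconds."""
    request_cost = invocations / 1_000_000 * price_per_million_requests
    # GB-seconds = invocations x duration in seconds x memory in GB.
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    return request_cost + gb_seconds * price_per_gb_second
```

With these assumed rates, one million 200 ms invocations at 512 MB come to roughly $1.87 ($0.20 in request fees plus 100,000 GB-seconds of compute), and a month with zero invocations costs nothing for compute.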

In the evolving landscape of AI application development, the choice between Model Context Protocol (MCP) and Serverless APIs isn't necessarily an either-or decision. Each offers unique advantages and has its own set of limitations.

By thoughtfully integrating both architectures, developers can build AI applications that are both contextually intelligent and highly scalable. This hybrid approach enables the creation of robust, efficient, and maintainable AI solutions that adapt to a wide range of use cases and workloads—an approach aligned with the principles of AI-first API design.

At Treblle, we understand the complexities involved in managing APIs and integrating emerging protocols, such as MCP. Our platform provides real-time API Intelligence, documentation, and analytics, enabling developers to focus on building innovative AI solutions without being hindered by infrastructure challenges. Whether you're leveraging MCP, Serverless APIs, or a combination of both, Treblle provides the tools and insights needed to streamline your development process.

As the AI ecosystem continues to mature, embracing the synergy between MCP and Serverless architectures will be key to unlocking the full potential of AI applications. By leveraging the strengths of both, developers can create intelligent, responsive, and scalable solutions that meet the demands of today's dynamic technological landscape.

💡

Ready to bring observability and intelligence to your AI workflows? Treblle helps you track, debug, and optimize every API in real time—so you can focus on building, not babysitting infrastructure.
