AI | Oct 15, 2025 | 14 min read | By Savan Kharod

Savan Kharod works on demand generation and content at Treblle, where he focuses on SEO, content strategy, and developer-focused marketing. With a background in engineering and a passion for digital marketing, he combines technical understanding with skills in paid advertising, email marketing, and CRM workflows to drive audience growth and engagement. He actively participates in industry webinars and community sessions to stay current with marketing trends and best practices.

API adoption velocity directly correlates with developer productivity and integration success. Modern development teams require APIs that minimize onboarding friction and accelerate time to production. According to industry reports, 89% of developers now use AI tools in their workflow. Yet only 24% of APIs are designed with AI agent compatibility in mind, creating a significant gap between developer expectations and available tooling.

Documentation quality remains the primary factor in API adoption decisions. Over 80% of developers report that clear, accessible documentation heavily influences whether they choose to integrate an API.

However, documentation alone is insufficient. APIs must now support AI code generation tools like Cursor and Replit, which require publicly accessible documentation to function effectively. As Dharmesh Shah, CTO of HubSpot, notes:

"Your API docs should be publicly accessible, not locked up behind a login... In the age of AI, inaccessible docs can be an obstacle to adoption."

Time to First Call (TTFC) serves as the critical metric for measuring onboarding efficiency. This metric tracks the duration from developer registration to their first successful API request. Organizations that systematically reduce TTFC see measurable improvements in adoption rates, reduced support overhead, and higher developer satisfaction.

Tracking TTFC alongside related metrics, such as developer drop-off rate and support tickets per integration, provides actionable insights into onboarding bottlenecks.

In this article, we discuss six ways to reduce time to first integration and explain why it works as a success metric.

Manual API documentation maintenance creates a persistent lag between code changes and documentation updates. This synchronization problem leads to integration errors, increased support tickets, and developer frustration.

Automated documentation systems eliminate this gap by generating accurate, up-to-date documentation directly from API traffic and schema definitions.

Real-time schema detection monitors API requests and responses to automatically detect endpoint changes, parameter updates, and response structure modifications. This approach ensures documentation accuracy without manual intervention.
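To make the idea concrete, here is a minimal sketch of schema detection on observed payloads. It is not how Treblle or any specific product implements it; the function names `infer_schema` and `diff_schemas` and the sample payloads are assumptions made for illustration. The sketch flattens a JSON response into a map of field paths to types, then compares two observations of the same endpoint.

```python
from typing import Any


def infer_schema(value: Any, path: str = "$") -> dict[str, str]:
    """Flatten a JSON-like value into {json_path: type_name}."""
    if isinstance(value, dict):
        schema = {path: "object"}
        for key, child in value.items():
            schema.update(infer_schema(child, f"{path}.{key}"))
        return schema
    if isinstance(value, list):
        schema = {path: "array"}
        # Only the first element is sampled; a fuller version would merge all items.
        if value:
            schema.update(infer_schema(value[0], f"{path}[]"))
        return schema
    if isinstance(value, bool):  # check bool before int: bool is a subclass of int
        return {path: "boolean"}
    if isinstance(value, (int, float)):
        return {path: "number"}
    if value is None:
        return {path: "null"}
    return {path: "string"}


def diff_schemas(old: dict[str, str], new: dict[str, str]) -> dict[str, list[str]]:
    """Report fields that were added, removed, or changed type."""
    return {
        "added": sorted(new.keys() - old.keys()),
        "removed": sorted(old.keys() - new.keys()),
        "changed": sorted(k for k in old.keys() & new.keys() if old[k] != new[k]),
    }


# Two hypothetical responses observed from the same endpoint at different times.
before = {"id": 1, "name": "Ada", "tags": ["admin"]}
after = {"id": "1", "name": "Ada", "email": "ada@example.com"}

changes = diff_schemas(infer_schema(before), infer_schema(after))
print(changes)
```

Here the diff reports `$.email` as added, `$.tags` as removed, and `$.id` as changed from number to string. Those are the kinds of changes that would otherwise drift out of hand-written documentation.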

Automated documentation systems also maintain consistency across all endpoints. By enforcing standardized formats and structure, these tools reduce the cognitive load on developers who need to understand multiple endpoints quickly. The documentation becomes a single source of truth that developers can rely on during integration.

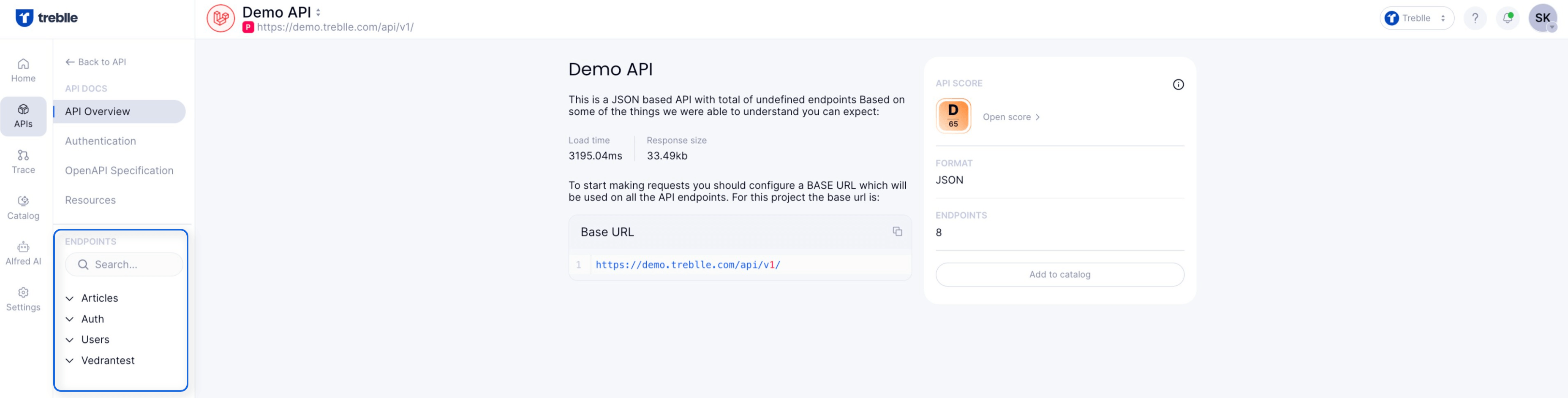

Treblle's API Intelligence Platform automates documentation generation by monitoring API traffic. The platform captures every request and response, extracting schema information to build comprehensive endpoint documentation without requiring manual input from development teams.

Real-time schema detection identifies API changes as they occur in production. When endpoints are added, modified, or deprecated, the documentation updates automatically.

This eliminates version drift and ensures developers always have access to current API specifications. The system generates OpenAPI-compliant specifications that integrate with standard developer tools and workflows.

The platform categorizes endpoints logically, groups related resources, and generates inline examples from actual API usage. This approach produces documentation that reflects real-world usage patterns rather than theoretical implementations, making integration more straightforward for developers.

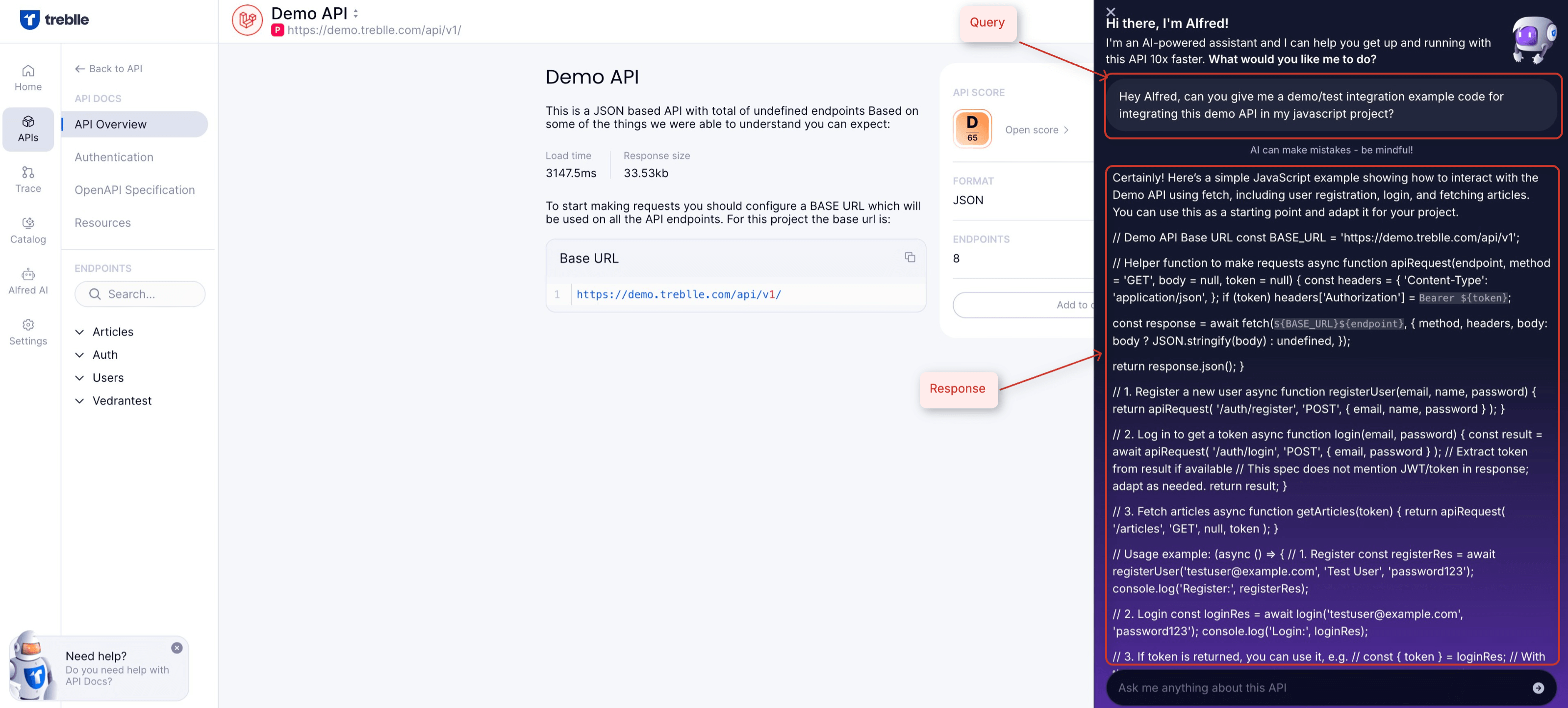

Understanding API docs is one thing, and getting working code from them is another. That’s where Alfred AI comes in.

Alfred AI analyzes API traffic patterns to generate context-appropriate integration code. By examining successful request patterns, the system identifies common use cases and produces code that matches real implementation scenarios. This reduces the time developers spend translating abstract documentation into working code.

What sets Alfred apart from a code snippet bot is its multi-language support. Whether your developers work in JavaScript, Python, .NET, or other languages and frameworks, Alfred outputs idiomatic code. Teams spend less time translating example pseudocode and more time building actual code.

By embedding this code generation directly in your developer portal or documentation UI, Treblle helps your teams and external users move from reading to integrating faster. That shortens onboarding and reduces the integration errors caused by mismatches between docs and real-world behavior.

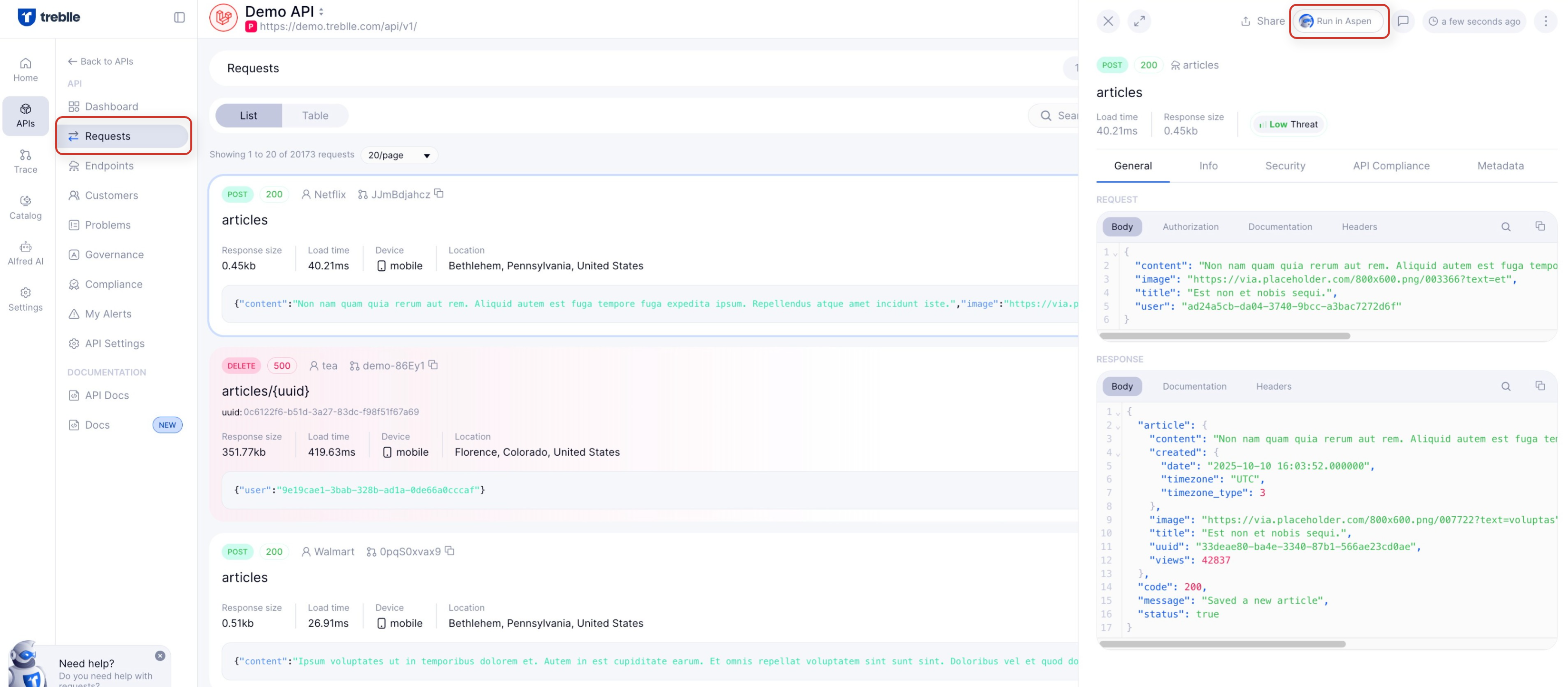

Real-time API observability provides immediate visibility into API behavior, performance metrics, and error conditions. This visibility enables rapid debugging by giving developers complete context for each request, including headers, parameters, response bodies, and timing information.

With 51% of developers now citing unauthorized agent access as a top security risk, observability tools must balance transparency with security. Effective observability platforms provide developers with the diagnostic information they need while maintaining appropriate access controls and data protection.

Actionable insights derived from observability data help development teams identify patterns in API usage and failure modes. Rather than waiting for developers to report issues, teams can proactively detect problems through anomaly detection and performance monitoring. This proactive approach reduces downtime and improves the overall integration experience.

Time to First Call (TTFC) measures the elapsed time from developer registration to their first successful API request. This metric provides a quantifiable indicator of onboarding efficiency and documentation quality. Lower TTFC values correlate with better onboarding experiences and higher adoption rates.
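As a rough illustration of the definition above, the sketch below computes TTFC for one developer from a registration timestamp and a list of `(timestamp, status_code)` pairs. The function name, the log shape, and the choice to treat any 2xx status as "successful" are assumptions for this example, not a standard.

```python
from datetime import datetime, timedelta
from typing import Optional


def time_to_first_call(
    registered_at: datetime,
    calls: list[tuple[datetime, int]],
) -> Optional[timedelta]:
    """Return the time from registration to the first 2xx call, or None if there is none."""
    successes = [
        ts for ts, status in calls
        if 200 <= status < 300 and ts >= registered_at
    ]
    if not successes:
        return None
    return min(successes) - registered_at


# Hypothetical call log for one developer.
registered = datetime(2025, 10, 1, 9, 0)
call_log = [
    (datetime(2025, 10, 1, 9, 5), 401),   # missing API key
    (datetime(2025, 10, 1, 9, 12), 400),  # malformed body
    (datetime(2025, 10, 1, 9, 20), 200),  # first success
    (datetime(2025, 10, 1, 9, 25), 200),
]

ttfc = time_to_first_call(registered, call_log)
print(ttfc)  # 0:20:00
```

The failed calls before the first success are worth keeping alongside the TTFC value, since they show what the developer struggled with during those 20 minutes.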

Tracking TTFC at a granular level reveals specific friction points in the onboarding flow. By segmenting TTFC by developer persona, use case, or integration type, teams can identify which scenarios require additional support or documentation improvements. This data-driven approach enables targeted optimizations that deliver measurable results.
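Segmentation can be sketched in a few lines. The example below assumes each record carries a `segment` label and a `ttfc_minutes` value; both field names and the sample numbers are made up for illustration. It uses the median rather than the mean so that a few very slow integrations do not dominate the picture.

```python
from collections import defaultdict
from statistics import median


def median_ttfc_by_segment(records: list[dict]) -> dict[str, float]:
    """Group TTFC samples (in minutes) by segment and return each segment's median."""
    grouped: dict[str, list[float]] = defaultdict(list)
    for record in records:
        grouped[record["segment"]].append(record["ttfc_minutes"])
    return {segment: median(values) for segment, values in grouped.items()}


# Made-up samples; real values would come from onboarding analytics.
samples = [
    {"segment": "backend", "ttfc_minutes": 12},
    {"segment": "backend", "ttfc_minutes": 18},
    {"segment": "mobile", "ttfc_minutes": 45},
    {"segment": "mobile", "ttfc_minutes": 30},
    {"segment": "mobile", "ttfc_minutes": 90},
]

by_segment = median_ttfc_by_segment(samples)
slowest = max(by_segment, key=by_segment.get)
print(by_segment, slowest)
```

In this sample the `mobile` segment has the highest median, which would point the team toward mobile-specific guides or examples first.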

Continuous TTFC monitoring establishes baseline performance and tracks the impact of onboarding improvements over time. Teams can validate changes by measuring TTFC before and after implementing documentation updates, workflow changes, or new tooling. This creates a feedback loop that drives systematic improvement in developer experience.

API visibility tools like Treblle capture detailed request and response data, revealing where developers encounter problems during integration. High error rates on specific endpoints, repeated failed authentication attempts, or unusual request patterns all indicate potential bottlenecks that require attention.

Performance metrics like response time, throughput, and error rates help teams prioritize optimization efforts. By identifying slow endpoints or unreliable services, teams can address issues before they significantly impact developer experience. Real-time alerting ensures teams respond quickly to degraded performance or service disruptions.
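A minimal sketch of this kind of prioritization, assuming request logs are available as dictionaries with `endpoint`, `status`, and `latency_ms` keys (hypothetical field names), might compute an error rate and a 95th-percentile latency per endpoint:

```python
from collections import defaultdict
from statistics import quantiles


def endpoint_stats(traffic: list[dict]) -> dict[str, dict[str, float]]:
    """Compute error rate and p95 latency per endpoint."""
    by_endpoint: dict[str, list[dict]] = defaultdict(list)
    for req in traffic:
        by_endpoint[req["endpoint"]].append(req)

    stats = {}
    for endpoint, reqs in by_endpoint.items():
        errors = sum(1 for r in reqs if r["status"] >= 400)
        latencies = [r["latency_ms"] for r in reqs]
        # quantiles() needs at least two data points on older Python versions,
        # so fall back to the single observed value.
        if len(latencies) > 1:
            p95 = quantiles(latencies, n=20, method="inclusive")[-1]
        else:
            p95 = latencies[0]
        stats[endpoint] = {"error_rate": errors / len(reqs), "p95_ms": p95}
    return stats


# Made-up traffic sample.
traffic = [
    {"endpoint": "POST /orders", "status": 201, "latency_ms": 100},
    {"endpoint": "POST /orders", "status": 422, "latency_ms": 110},
    {"endpoint": "POST /orders", "status": 422, "latency_ms": 120},
    {"endpoint": "POST /orders", "status": 201, "latency_ms": 900},
    {"endpoint": "GET /orders", "status": 200, "latency_ms": 40},
]

stats = endpoint_stats(traffic)
worst = max(stats, key=lambda e: stats[e]["error_rate"])
print(worst, stats[worst])
```

With this data, `POST /orders` stands out with a 50% error rate, mostly validation failures. That pattern usually suggests the request body is under-documented rather than that the service is unreliable.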

Usage pattern analysis reveals how developers actually use the API versus how it was intended to be used. This insight often uncovers missing features, confusing workflows, or documentation gaps. By understanding real usage patterns, teams can refine both the API design and supporting documentation to better match developer needs.

Interactive API testing environments allow developers to experiment with API endpoints without local setup or configuration. Aspen, Treblle’s free API testing tool, offers live pre-configured sandbox environments where developers can execute requests, examine responses, and understand API behavior through direct interaction.

These environments reduce the barrier to first success by eliminating setup friction. Developers can validate their understanding of API functionality before investing time in local integration. This hands-on approach accelerates learning and builds confidence in the API's capabilities.

Aspen provides a browser-based API testing interface that requires no installation or configuration. Developers can construct requests, execute them against the API, and examine detailed responses immediately. This zero-setup approach removes technical barriers that often delay initial API exploration.

The tool supports complex request construction, including custom headers, authentication schemes, and request bodies. Developers can save request collections for reuse and share them with team members, facilitating collaboration during integration. Request history and response caching enable quick iteration and comparison.

Aspen integrates with API documentation, allowing developers to execute example requests directly from documentation pages. This seamless connection between documentation and testing reduces context switching and accelerates the path from reading about an endpoint to successfully calling it.

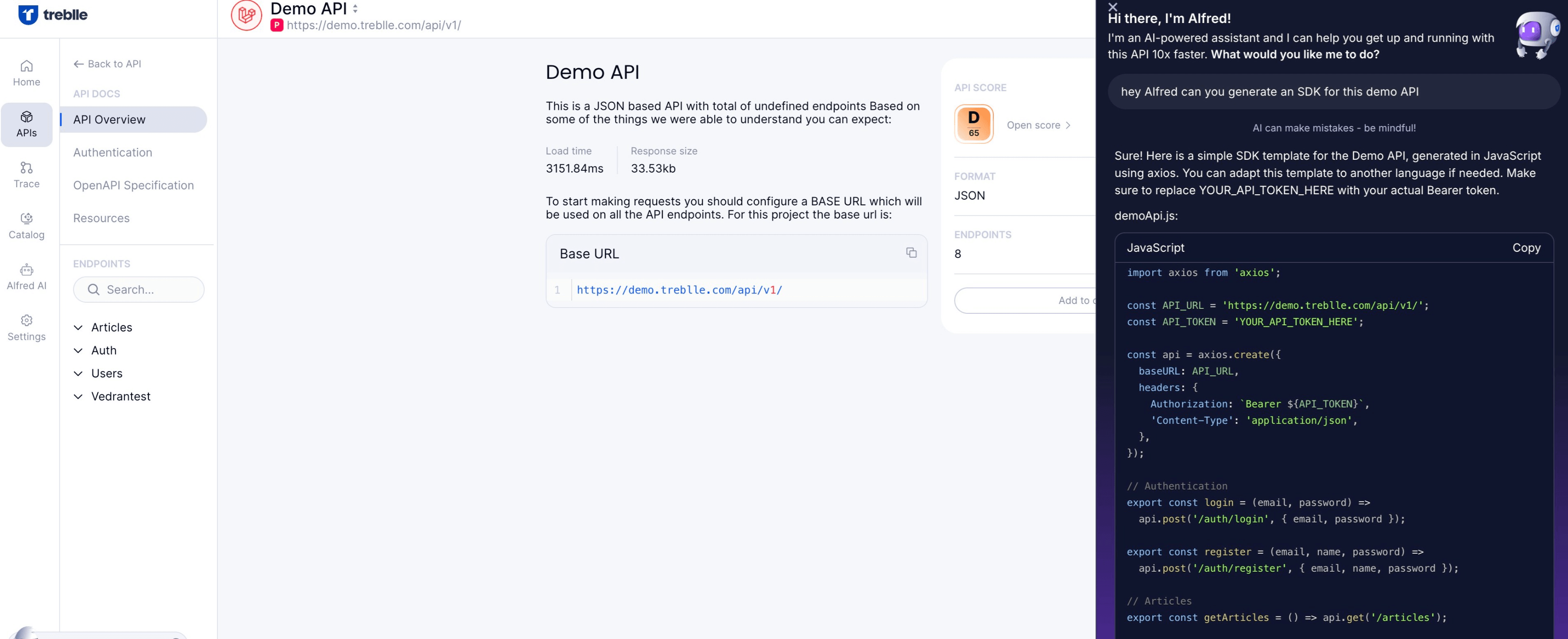

Manually maintaining SDKs across multiple programming languages creates significant overhead for API providers. Each language requires different idioms, error handling patterns, and package management approaches. AI-powered SDK generation automates this process by producing idiomatic client libraries from API specifications.

Automated code generation ensures consistency across all supported languages. When the API changes, all SDKs update simultaneously, eliminating version skew between different client libraries. This consistency reduces confusion and ensures developers can rely on current, accurate implementations.

Code snippet generation provides immediately usable examples for common integration tasks. Rather than translating generic documentation into specific implementations, developers receive working code that demonstrates proper API usage in their target language. This acceleration is particularly valuable during initial integration when developers are learning the API's patterns.

Alfred AI generates client libraries by analyzing OpenAPI specifications and API traffic patterns. The system produces idiomatic code for each target language, following language-specific conventions for error handling, async operations, and data serialization. Generated libraries include comprehensive type definitions and inline documentation.

The AI adapts libraries based on actual usage patterns observed in production traffic. Common request patterns become convenience methods, while rarely-used combinations remain accessible through lower-level interfaces. This usage-informed approach creates client libraries that match real-world integration requirements.

Generated SDKs maintain synchronization with API changes through continuous monitoring. When endpoints are modified or added, Alfred regenerates affected portions of each client library. This automation ensures SDKs remain current without manual maintenance effort.

Standardized API patterns enable AI tools to generate accurate code and understand API functionality. RESTful conventions, consistent error formats, and predictable resource naming help AI systems reason about API behavior.

Several reports indicate that while 70% of developers are aware of the Model Context Protocol (MCP) for AI-API integration, only 10% use it regularly, suggesting a significant opportunity for standardization.

OpenAPI specifications provide machine-readable API descriptions that AI systems can consume. Comprehensive specifications that include examples, parameter constraints, and error responses enable AI tools to generate more accurate code. APIs with detailed specifications tend to yield more accurate AI-generated integration code than those with minimal documentation.
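One way to check a specification for the gaps described above is a small lint pass. The sketch below assumes the OpenAPI document has already been loaded into a Python dictionary; the function name `find_spec_gaps` and the two rules it applies (every operation documents at least one 4xx or 5xx response, and at least one response carries an example) are illustrative choices, not an official completeness standard.

```python
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def find_spec_gaps(spec: dict) -> list[str]:
    """List operations that lack an error response or a response example."""
    gaps = []
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters"
            label = f"{method.upper()} {path}"
            responses = operation.get("responses", {})
            if not any(str(code).startswith(("4", "5")) for code in responses):
                gaps.append(f"{label}: no error response documented")
            has_example = any(
                "example" in media or "examples" in media
                for response in responses.values()
                for media in response.get("content", {}).values()
            )
            if not has_example:
                gaps.append(f"{label}: no response example")
    return gaps


# A tiny hypothetical spec with one well-documented and one sparse operation.
spec = {
    "openapi": "3.0.3",
    "paths": {
        "/users/{id}": {
            "get": {
                "responses": {
                    "200": {
                        "description": "OK",
                        "content": {"application/json": {"example": {"id": 1}}},
                    },
                    "404": {"description": "Not found"},
                }
            },
            "delete": {"responses": {"204": {"description": "Deleted"}}},
        }
    },
}

gaps = find_spec_gaps(spec)
print(gaps)
```

Running a check like this in CI is one way to keep specifications detailed enough for both human readers and AI tools.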

Consistent authentication patterns, error handling, and response formats reduce the complexity AI systems must handle. By adhering to established conventions, APIs become more predictable, making them easier for AI tools to understand and generate code against. This standardization benefits both AI-assisted and manual integration approaches.

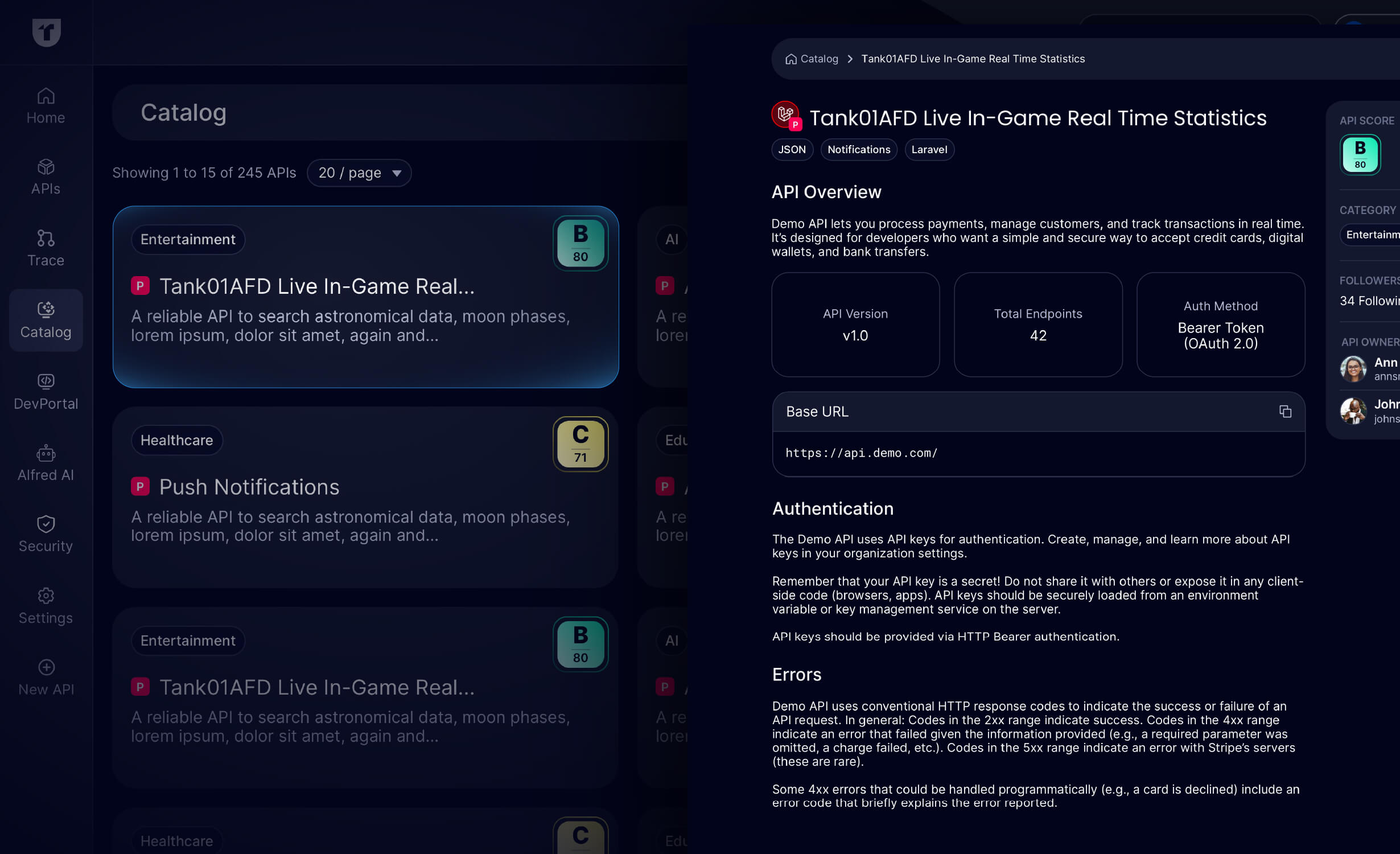

Centralized API catalogs provide a unified interface for discovering and accessing all available APIs within an organization or ecosystem. These catalogs aggregate documentation, specifications, and usage examples, giving developers a single starting point for API exploration.

With 43% of organizations now generating revenue from their API programs, effective discoverability directly impacts business outcomes. Developers who struggle to find or understand available APIs will seek alternatives or build redundant functionality, which increases costs and reduces efficiency.

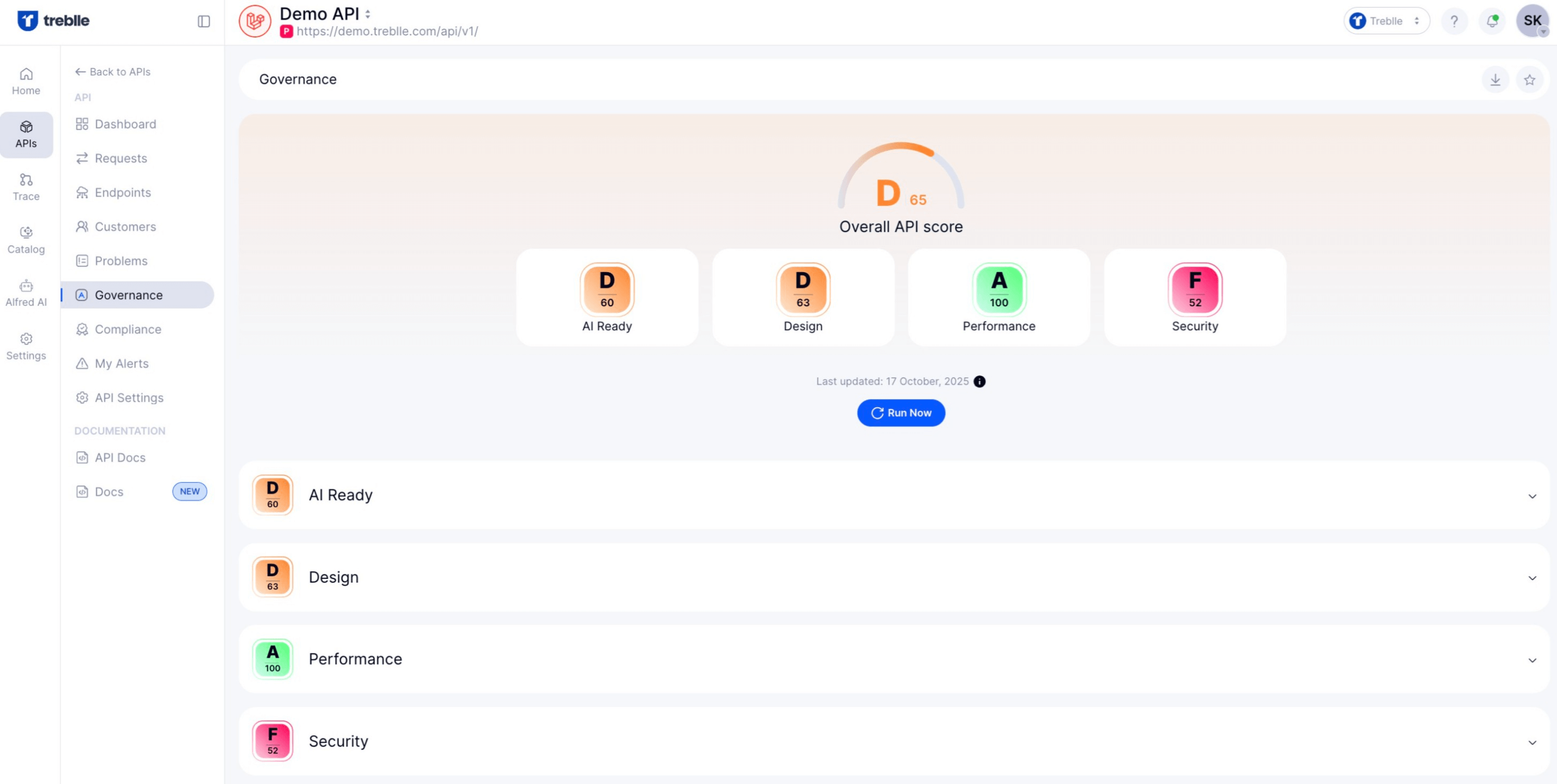

Governance frameworks ensure APIs meet quality, security, and consistency standards before publication. Automated quality scoring helps developers identify reliable, well-documented APIs and avoid those with known issues. This quality signal reduces integration risk and guides developers toward better choices.

Bring policy enforcement and control to every stage of your API lifecycle.

Treblle helps you govern and secure your APIs from development to production.

Explore Treblle

Searchable API catalogs enable developers to find relevant APIs through keyword search, category browsing, and tag-based filtering. Effective search considers not just API names but also functionality, use cases, and domain concepts. Natural language search enables developers to discover APIs even when they're unsure of the exact terminology.

Catalog entries should include comprehensive metadata: API version, authentication requirements, rate limits, SLA commitments, and support contacts. Developers need this information to make informed integration decisions. Usage examples and integration guides within catalog entries reduce the time from discovery to first successful call.
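The metadata and search behavior described above can be sketched with a small data model. Everything here is hypothetical: the `CatalogEntry` fields, the sample APIs, and the simple all-terms keyword match stand in for whatever a real catalog would use.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    name: str
    version: str
    auth: str
    rate_limit_per_minute: int
    sla: str
    support_contact: str
    description: str = ""
    tags: list[str] = field(default_factory=list)


def search_catalog(entries: list[CatalogEntry], query: str) -> list[CatalogEntry]:
    """Return entries where every query term appears in the name, description, or tags."""
    terms = query.lower().split()
    results = []
    for entry in entries:
        haystack = " ".join([entry.name, entry.description, *entry.tags]).lower()
        if all(term in haystack for term in terms):
            results.append(entry)
    return results


# Hypothetical catalog contents.
catalog = [
    CatalogEntry(
        name="Payments API", version="2.1.0", auth="OAuth 2.0",
        rate_limit_per_minute=600, sla="99.9% uptime",
        support_contact="payments-team@example.com",
        description="Create charges and issue refunds",
        tags=["billing", "checkout"],
    ),
    CatalogEntry(
        name="Notifications API", version="1.4.2", auth="API key",
        rate_limit_per_minute=1200, sla="99.5% uptime",
        support_contact="notify-team@example.com",
        description="Send email and push messages",
        tags=["messaging"],
    ),
]

matches = search_catalog(catalog, "refund")
print([entry.name for entry in matches])  # ['Payments API']
```

Searching descriptions and tags, not just names, is what lets a developer find the Payments API by typing "refund" without knowing what the API is called.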

Self-service provisioning allows developers to obtain API credentials and access without manual approval processes. Automated provisioning reduces friction and accelerates onboarding while maintaining appropriate security controls through policy-based access management.

API scoring systems evaluate APIs across multiple dimensions: documentation completeness, test coverage, performance characteristics, security posture, and breaking change frequency. Scores provide an at-a-glance quality indicator that helps developers assess integration risk.

Automated scoring evaluates APIs continuously, flagging degradation in quality metrics. Documentation completeness can be measured by checking for required sections, code examples, and error documentation. Security scoring assesses authentication mechanisms, encryption standards, and known vulnerabilities.

Public scores create accountability and incentivize API quality improvements. Teams can track score trends over time and set quality gates that prevent low-scoring APIs from reaching production. This systematic approach to quality management improves the overall developer experience across an API portfolio.
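A documentation-completeness score and a quality gate can be as simple as the sketch below. The required sections, the equal weighting, and the threshold of 75 are assumptions chosen for illustration; a real scoring system would cover more dimensions, such as security and performance.

```python
REQUIRED_SECTIONS = ("description", "authentication", "examples", "errors")


def documentation_score(doc: dict) -> int:
    """Score documentation completeness from 0 to 100 based on required sections."""
    present = sum(1 for section in REQUIRED_SECTIONS if doc.get(section))
    return round(100 * present / len(REQUIRED_SECTIONS))


def passes_quality_gate(doc: dict, minimum: int = 75) -> bool:
    """Return True when the documentation score meets the minimum threshold."""
    return documentation_score(doc) >= minimum


# Hypothetical documentation records for two APIs.
orders_doc = {
    "description": "Manage customer orders",
    "authentication": "Bearer token",
    "examples": ["curl -H 'Authorization: Bearer <token>' https://api.example.com/orders"],
    "errors": [],  # error documentation is missing
}
legacy_doc = {"description": "Legacy reporting endpoints"}

print(documentation_score(orders_doc), passes_quality_gate(orders_doc))
print(documentation_score(legacy_doc), passes_quality_gate(legacy_doc))
```

The first API scores 75 and passes the gate despite its missing error documentation, while the second scores 25 and would be blocked until its documentation improves.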

Observability data enables personalized onboarding experiences tailored to each developer's behavior and progress. By tracking which documentation pages developers visit, which endpoints they call, and where they encounter errors, systems can provide targeted guidance and resources.

Personalization reduces cognitive load by presenting relevant information at the right time. Instead of overwhelming developers with comprehensive documentation upfront, personalized systems progressively disclose information as developers advance through integration stages. This staged approach matches information delivery to actual needs.

Behavioral analytics identify common integration patterns and friction points. By understanding typical developer journeys, teams can optimize critical paths and provide proactive support at known difficulty points. This data-driven approach to developer experience creates more effective onboarding flows.

API analytics track developer progression through onboarding stages: registration, first API call, successful integration, and production deployment. By measuring conversion rates between stages, teams identify where developers abandon the onboarding process and implement targeted interventions.
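The stage-to-stage conversion described above can be computed directly from counts of developers who reached each stage. The stage names follow the paragraph; the counts and the function name `stage_conversions` are made up for this sketch.

```python
STAGES = [
    "registration",
    "first_api_call",
    "successful_integration",
    "production_deployment",
]


def stage_conversions(counts: dict[str, int]) -> list[tuple[str, str, float]]:
    """Return (from_stage, to_stage, conversion_rate) for each consecutive stage pair."""
    conversions = []
    for current, following in zip(STAGES, STAGES[1:]):
        entered = counts.get(current, 0)
        rate = counts.get(following, 0) / entered if entered else 0.0
        conversions.append((current, following, rate))
    return conversions


# Made-up funnel counts.
funnel = {
    "registration": 200,
    "first_api_call": 120,
    "successful_integration": 90,
    "production_deployment": 45,
}

conversions = stage_conversions(funnel)
biggest_drop = min(conversions, key=lambda c: c[2])
print(biggest_drop)  # ('successful_integration', 'production_deployment', 0.5)
```

In this sample the largest drop-off happens between successful integration and production deployment, so interventions aimed at the first API call would miss the main problem.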

Segmentation by developer experience level, use case, or organization type enables customized onboarding paths. Experienced developers may prefer minimal guidance and direct access to comprehensive documentation, while novices benefit from structured tutorials and step-by-step workflows. Analytics data reveals which segments require which approach.

A/B testing of onboarding variations provides empirical data on what works. Teams can test different documentation structures, tutorial approaches, or tool integrations and measure impact on TTFC and completion rates. Continuous optimization based on analytics data drives measurable improvements in developer experience.

Effective API onboarding requires systematic attention to documentation quality, debugging tools, testing environments, automation, and continuous optimization. Organizations that excel in these areas see faster developer adoption, reduced support costs, and higher API engagement.

Treblle's API Intelligence Platform addresses these requirements through automated documentation, real-time observability, AI-assisted development tools, and comprehensive analytics. By eliminating manual documentation maintenance and providing instant visibility into API behavior, Treblle enables development teams to focus on building features rather than supporting integration.

The combination of AI-powered tools and observability-driven insights creates a sustainable approach to developer experience. As APIs evolve and developer expectations increase, platforms that automate routine tasks and provide intelligent assistance will deliver competitive advantages in adoption speed and developer satisfaction.

Need real-time insight into how your APIs are used and performing?

Treblle helps you monitor, debug, and optimize every API request.

Explore Treblle

AI automates documentation generation, creates context-aware code examples, and generates multi-language SDKs from API specifications. These capabilities reduce manual maintenance overhead and provide developers with immediately usable resources, significantly reducing time to first integration.

Over 80% of developers report that documentation quality influences their decision to adopt an API. Clear documentation reduces onboarding time, minimizes integration errors, and decreases support burden, directly impacting adoption rates and developer satisfaction.

TTFC measures the time from developer registration to their first successful API request. This metric indicates onboarding efficiency and documentation quality. Lower TTFC correlates with better developer experience and higher adoption rates. Organizations use TTFC to identify and eliminate onboarding friction.

Live sandboxes eliminate local setup requirements and allow developers to test API functionality immediately. By providing pre-configured environments that simulate production behavior, sandboxes enable developers to validate their understanding and build confidence before committing to full integration.

Real-time observability provides complete context for each API request, including headers, parameters, and response data. This visibility enables rapid problem diagnosis and resolution. Instead of waiting for support responses, developers can independently debug issues, reducing friction and accelerating integration progress.
