API Design | Jun 4, 2024 | 10 min read | By Stefan Đokić | Reviewed by Pavle Davitković

Stefan Đokić is a Senior Software Engineer and tech content creator with a strong focus on the .NET ecosystem. He specializes in breaking down complex engineering concepts in C#, .NET, and software architecture into accessible, visually engaging content. Stefan combines hands-on development experience with a passion for teaching, helping developers enhance their skills through practical, focused learning.

In the world of web development, performance is a crucial factor that can make or break an application. One of the most effective strategies to enhance performance is caching. Through caching, we can reduce the load on our servers, decrease response times, and improve the overall user experience.

In this blog post, I will share my experience with optimizing .NET REST APIs using caching, including a couple of practical techniques with code snippets to demonstrate their implementation.

When building REST APIs with .NET, performance optimization is a key consideration. Users expect fast and reliable responses, and slow APIs can lead to frustration and decreased usage. Security is another crucial aspect, and if you’re interested in enhancing your API’s security, be sure to read this deep dive into .NET REST API security.

Caching is a technique that allows us to store frequently accessed data in a temporary storage location, reducing the need to repeatedly fetch the same data from the database or other sources. By leveraging caching, we can significantly improve the performance of our APIs.

You can use various Treblle tools to monitor API performance. To be able to follow, be sure to:

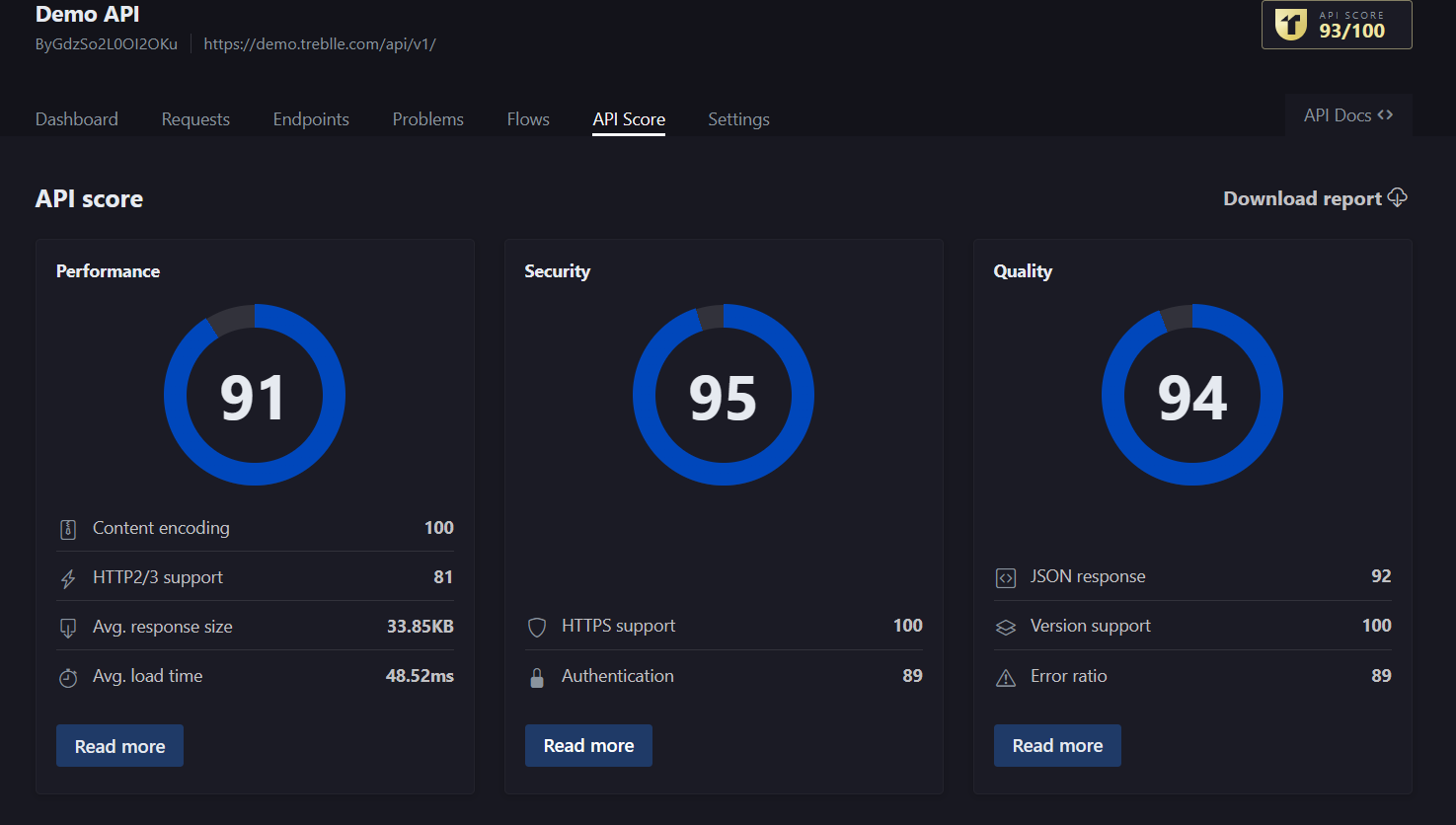

API Score dashboard in Treblle

From the Dashboard in the Treblle Web Monitoring app, you can see your API's score. Performance is one of the measured categories, and the "Read more" button shows all the details.

Need real-time insight into how your APIs are used and performing?

Treblle helps you monitor, debug, and optimize every API request.

Explore Treblle


Okay, let's take a look at some recommendations for caching.

Microsoft provides a useful list of rules for implementing a cache correctly:

Code should always have a fallback option to fetch data and not depend on a cached value being available.

The cache uses a scarce resource, memory. Limit cache growth:

Let's take a look at some implementations.

One of the simplest and most effective caching strategies is in-memory caching. In-memory caching stores data directly in the memory of the application server, providing extremely fast access times. This technique is ideal for small to medium-sized datasets that are frequently accessed.

To implement in-memory caching in a .NET REST API, we can use the IMemoryCache interface provided by the Microsoft.Extensions.Caching.Memory package.

Here’s a step-by-step guide to implementing in-memory caching:

First, we need to install the Microsoft.Extensions.Caching.Memory package:

```shell
dotnet add package Microsoft.Extensions.Caching.Memory
```

Next, we need to configure the caching services in our Program.cs file:

```csharp
builder.Services.AddMemoryCache();
```

Now, we can use the IMemoryCache interface in our controller to cache data:

```csharp
using Microsoft.AspNetCore.Mvc;
using Microsoft.Extensions.Caching.Memory;
using System;

namespace MyApi.Controllers
{
    [ApiController]
    [Route("api/[controller]")]
    public class ProductsController : ControllerBase
    {
        private readonly IMemoryCache _memoryCache;

        public ProductsController(IMemoryCache memoryCache)
        {
            _memoryCache = memoryCache;
        }

        [HttpGet("{id}")]
        public IActionResult GetProduct(int id)
        {
            string cacheKey = $"Product_{id}";

            // TryGetValue returns true on a cache hit; on a miss, load and cache the product
            if (!_memoryCache.TryGetValue(cacheKey, out Product? product))
            {
                // Simulate fetching data from a database
                product = FetchProductFromDatabase(id);

                // Evict after 10 minutes at most, or sooner if unused for 2 minutes
                var cacheOptions = new MemoryCacheEntryOptions
                {
                    AbsoluteExpirationRelativeToNow = TimeSpan.FromMinutes(10),
                    SlidingExpiration = TimeSpan.FromMinutes(2)
                };

                // Save data in cache
                _memoryCache.Set(cacheKey, product, cacheOptions);
            }

            return Ok(product);
        }

        private Product FetchProductFromDatabase(int id)
        {
            // Simulate database fetch
            return new Product { Id = id, Name = "Sample Product", Price = 99.99m };
        }
    }

    public class Product
    {
        public int Id { get; set; }
        public string Name { get; set; } = string.Empty;
        public decimal Price { get; set; }
    }
}
```

In this example, we first check whether the product is already in the cache. If it is not, we fetch it from the database (simulated here) and store it in the cache with specific cache options: the entry is removed 10 minutes after it was added, or earlier if nobody requests it for 2 minutes.
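The same check-then-store logic can also be written with the GetOrCreateAsync extension method from Microsoft.Extensions.Caching.Memory, which runs the factory only when the key is missing. The sketch below is an alternative, not the approach used in the controller; `ProductReader`, `ProductDto`, and the `fetchFromDatabase` delegate are hypothetical names standing in for your own types and data access.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Memory;

// Hypothetical DTO used only for this sketch
public record ProductDto(int Id, string Name, decimal Price);

// Hypothetical reader that wraps the cache-aside pattern in one call
public sealed class ProductReader
{
    private readonly IMemoryCache _memoryCache;
    private readonly Func<int, Task<ProductDto>> _fetchFromDatabase;

    public ProductReader(IMemoryCache memoryCache, Func<int, Task<ProductDto>> fetchFromDatabase)
    {
        _memoryCache = memoryCache;
        _fetchFromDatabase = fetchFromDatabase;
    }

    public async Task<ProductDto?> GetProductAsync(int id)
    {
        // The factory runs only on a miss; the entry options are set on the new entry
        return await _memoryCache.GetOrCreateAsync($"Product_{id}", entry =>
        {
            entry.AbsoluteExpirationRelativeToNow = TimeSpan.FromMinutes(10);
            entry.SlidingExpiration = TimeSpan.FromMinutes(2);
            return _fetchFromDatabase(id);
        });
    }
}
```

One caveat: GetOrCreateAsync does not lock, so several concurrent requests that miss the same key can each run the factory. For most read-heavy endpoints that is acceptable; when it is not, the factory needs its own synchronization.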

From my experience, IMemoryCache in .NET is great for quickly boosting performance because it keeps data in the application's own memory, where it is very fast to access. However, it is limited by the amount of memory on the server, and it doesn't work well in distributed systems: each server instance keeps its own separate cache, and the cached data is lost whenever the application restarts. For larger applications or those needing to share data across multiple servers, I've found that something like Redis is a better option.
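The memory limit can be made explicit, which also covers Microsoft's rule that code should not depend on a cached value being available. Below is a minimal sketch, assuming the Microsoft.Extensions.Caching.Memory package; `ProductLookup` and its `loadFromSource` delegate are hypothetical. When MemoryCacheOptions.SizeLimit is set, every entry must declare a Size (in units you choose), and an entry that would push the cache over the limit is not stored, so the lookup always falls back to the source.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

// Hypothetical lookup service: the cache is an optimization, never the only source of data
public sealed class ProductLookup
{
    private readonly IMemoryCache _cache;
    private readonly Func<int, string> _loadFromSource; // stand-in for a database call

    public ProductLookup(IMemoryCache cache, Func<int, string> loadFromSource)
    {
        _cache = cache;
        _loadFromSource = loadFromSource;
    }

    public string Get(int id)
    {
        string key = $"Product_{id}";

        // Rule 1: a miss (expired, evicted, or never stored) falls back to the source
        if (_cache.TryGetValue(key, out string? cached) && cached is not null)
        {
            return cached;
        }

        string value = _loadFromSource(id);

        // Rule 2: each entry declares its size so the cache-wide SizeLimit can be enforced
        var options = new MemoryCacheEntryOptions()
            .SetSize(1)
            .SetAbsoluteExpiration(TimeSpan.FromMinutes(10));

        _cache.Set(key, value, options);
        return value;
    }
}
```

In an ASP.NET Core app, the limit can be passed at registration time, for example `builder.Services.AddMemoryCache(options => options.SizeLimit = 1024);`, where 1024 is an arbitrary example value.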

When in-memory caching is not sufficient, we can use distributed caching, where the cache lives in a separate service shared by all application instances.

Redis is a popular choice for distributed caching due to its high performance and scalability.

Let's see how to implement it.

First, we need to install the Microsoft.Extensions.Caching.StackExchangeRedis package:

```shell
dotnet add package Microsoft.Extensions.Caching.StackExchangeRedis
```

Next, we need to register the Redis cache in our Program.cs file:

```csharp
builder.Services.AddStackExchangeRedisCache(options =>
{
    options.Configuration = "localhost:6379";
    // Prefix added to every cache key, useful when several apps share one Redis instance
    options.InstanceName = "SampleInstance";
});
```

Now, we can use the IDistributedCache interface in our controller to cache data:

```csharp
using Microsoft.AspNetCore.Mvc;
using Microsoft.Extensions.Caching.Distributed;
using System;
using System.Text.Json;
using System.Threading.Tasks;

namespace MyApi.Controllers
{
    [ApiController]
    [Route("api/[controller]")]
    public class ProductsController : ControllerBase
    {
        private readonly IDistributedCache _distributedCache;

        public ProductsController(IDistributedCache distributedCache)
        {
            _distributedCache = distributedCache;
        }

        [HttpGet("{id}")]
        public async Task<IActionResult> GetProduct(int id)
        {
            string cacheKey = $"Product_{id}";

            // The distributed cache stores strings/bytes, so the product is cached as JSON
            string? productJson = await _distributedCache.GetStringAsync(cacheKey);
            Product? product;

            if (string.IsNullOrEmpty(productJson))
            {
                // Cache miss: simulate fetching data from a database
                product = FetchProductFromDatabase(id);

                // Serialize and save data in cache with the same expiration policy
                productJson = JsonSerializer.Serialize(product);
                var cacheOptions = new DistributedCacheEntryOptions
                {
                    AbsoluteExpirationRelativeToNow = TimeSpan.FromMinutes(10),
                    SlidingExpiration = TimeSpan.FromMinutes(2)
                };
                await _distributedCache.SetStringAsync(cacheKey, productJson, cacheOptions);
            }
            else
            {
                // Cache hit: rebuild the product from its JSON form
                product = JsonSerializer.Deserialize<Product>(productJson);
            }

            return Ok(product);
        }

        private Product FetchProductFromDatabase(int id)
        {
            // Simulate database fetch
            return new Product { Id = id, Name = "Sample Product", Price = 99.99m };
        }
    }

    public class Product
    {
        public int Id { get; set; }
        public string Name { get; set; } = string.Empty;
        public decimal Price { get; set; }
    }
}
```

In this example, we use the IDistributedCache interface to cache the product data in Redis. We serialize the product to JSON before storing it in the cache and deserialize it when reading it back.
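Because the serialize/deserialize steps repeat for every cached type, they can be moved into a small extension method. This is a hypothetical helper (`GetOrSetJsonAsync` is not part of the framework) built only on the GetStringAsync and SetStringAsync extensions used in the controller. It works against any IDistributedCache implementation, including Redis, and MemoryDistributedCache from Microsoft.Extensions.Caching.Memory can be used to exercise it locally without a Redis server.

```csharp
using System;
using System.Text.Json;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Distributed;

public static class DistributedCacheJsonExtensions
{
    // Hypothetical helper: returns the cached value for `key`, or runs `factory`,
    // stores its JSON form with `options`, and returns the fresh value
    public static async Task<T> GetOrSetJsonAsync<T>(
        this IDistributedCache cache,
        string key,
        Func<Task<T>> factory,
        DistributedCacheEntryOptions options)
    {
        string? json = await cache.GetStringAsync(key);
        if (!string.IsNullOrEmpty(json))
        {
            T? cached = JsonSerializer.Deserialize<T>(json);
            if (cached is not null)
            {
                return cached;
            }
        }

        // Cache miss: load from the source, store the JSON, and return the fresh value
        T value = await factory();
        await cache.SetStringAsync(key, JsonSerializer.Serialize(value), options);
        return value;
    }
}
```

Inside the controller, the whole miss/hit branch could then shrink to something like `await _distributedCache.GetOrSetJsonAsync(cacheKey, () => Task.FromResult(FetchProductFromDatabase(id)), cacheOptions)`.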

From my experience, IDistributedCache is excellent for applications that need to share cached data across multiple servers, and it scales better than IMemoryCache.

With IDistributedCache, you can use solutions like Redis, which store data outside the application process, so the cache remains available even after the application restarts. Setting it up is more complex, and every cache call adds a network round trip and serialization overhead, but for larger, distributed systems the benefits make it worth the effort.
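That extra moving part also means the cache itself can fail, for example when the Redis server is unreachable. Following Microsoft's rule that code should always have a fallback option, one approach is to treat cache errors as misses. This is a minimal sketch, assuming a hypothetical `ResilientCacheReader` helper built on the standard IDistributedCache string extensions.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Distributed;

// Hypothetical helper: cache failures are treated as misses so the request still succeeds
public static class ResilientCacheReader
{
    public static async Task<string> GetStringOrLoadAsync(
        IDistributedCache cache,
        string key,
        Func<Task<string>> loadFromSource,
        DistributedCacheEntryOptions options)
    {
        try
        {
            string? cached = await cache.GetStringAsync(key);
            if (cached is not null)
            {
                return cached;
            }
        }
        catch (Exception)
        {
            // Cache unreachable: fall through to the source (log this in real code)
        }

        string value = await loadFromSource();

        try
        {
            await cache.SetStringAsync(key, value, options);
        }
        catch (Exception)
        {
            // Failing to populate the cache should not fail the request either
        }

        return value;
    }
}
```

Catching every exception is deliberately blunt for a sketch; real code would usually log the failure and let cancellation propagate instead of swallowing it.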

Alongside optimizing performance, managing your API’s versions effectively is critical for scalability and backward compatibility. For more details, check out this article on API versioning in .NET.

Improved Performance:

Reduced Load on Primary Sources:

Scalability:

Cost Efficiency:

Cache Invalidation:

Memory Overhead:

Cache Misses:

Complexity in Multi-Tier Architectures:

Security Risks:

Caching offers significant benefits in terms of performance, scalability, and cost efficiency.

However, it also introduces challenges related to cache invalidation, memory overhead, and complexity in maintaining consistency. By carefully designing and managing caching strategies, developers can harness the power of caching while mitigating its drawbacks.
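Cache invalidation is usually the hardest of these challenges. A common approach is to evict the cached entry whenever the underlying data changes, so the next read repopulates it. This is a minimal sketch under assumptions: `ProductCatalog` and its dictionary are hypothetical stand-ins for a repository and database, and IMemoryCache.Remove is the framework call that evicts the entry (IDistributedCache offers RemoveAsync for the Redis case).

```csharp
using System;
using System.Collections.Concurrent;
using Microsoft.Extensions.Caching.Memory;

// Hypothetical catalog: a dictionary plays the role of the database
public sealed class ProductCatalog
{
    private readonly ConcurrentDictionary<int, decimal> _prices = new();
    private readonly IMemoryCache _cache;

    public ProductCatalog(IMemoryCache cache) => _cache = cache;

    private static string Key(int id) => $"ProductPrice_{id}";

    public decimal GetPrice(int id)
    {
        // Cache-aside read: fall back to the "database" on a miss
        if (_cache.TryGetValue(Key(id), out decimal price))
        {
            return price;
        }

        price = _prices.TryGetValue(id, out decimal stored) ? stored : 0m;
        _cache.Set(Key(id), price, TimeSpan.FromMinutes(10));
        return price;
    }

    public void UpdatePrice(int id, decimal newPrice)
    {
        // Write to the source of truth first, then evict the stale cache entry
        _prices[id] = newPrice;
        _cache.Remove(Key(id));
    }
}
```

Even this simple version has a small race: a read that loaded the old price just before the update can write it back into the cache just after the eviction. Short expirations limit how long such a stale value survives.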

Also, if you need to optimize your .NET API from other angles, be sure to read my article on performance optimization techniques for .NET APIs.

And don't forget to monitor your APIs: monitoring is the only way to find out what needs optimizing and why.
