
Other | Oct 3, 2025 | 12 min read | By Savan Kharod

Savan Kharod works on demand generation and content at Treblle, where he focuses on SEO, content strategy, and developer-focused marketing. With a background in engineering and a passion for digital marketing, he combines technical understanding with skills in paid advertising, email marketing, and CRM workflows to drive audience growth and engagement. He actively participates in industry webinars and community sessions to stay current with marketing trends and best practices.

In September 2025, Jaguar Land Rover (JLR), a UK-based luxury automaker, suffered a major cyberattack that halted its vehicle production worldwide. The breach disrupted manufacturing for weeks, ultimately led to the compromise of sensitive data, and exposed serious weaknesses in the company’s cybersecurity posture.

A notorious hacker collective claimed responsibility for the attack, which is part of a broader rise in high-profile breaches targeting the automotive industry.

In this article, we will provide a breakdown and analysis of the JLR breach, detailing what happened, how it occurred, and the lessons about API security that developers, CTOs, and cybersecurity teams can learn to prevent similar incidents.

For a deeper dive into API security, download The API Security Checklist for free.

Jaguar Land Rover is a global carmaker operating “smart” factories and integrated digital systems for manufacturing, logistics, and customer services. In today’s automotive industry, “everything is connected,” meaning that production lines, enterprise IT, and even customer-facing applications are deeply interlinked.

This connectivity enables efficiency and innovation, but it also creates a broad attack surface if security is not airtight. JLR had invested heavily in IT modernization, including an £800 million contract for cybersecurity and IT support with a major consulting firm. Yet the breach showed that even well-funded defenses can falter against determined adversaries.

JLR is one of the UK’s largest employers and is owned by India’s Tata Motors. Its three UK plants normally produce around 1,000 vehicles per day, so any operational outage has far-reaching consequences: in a just-in-time supply chain, a disruption at one manufacturer quickly cascades to thousands of workers and suppliers. This context helps explain why the breach had such a severe impact.

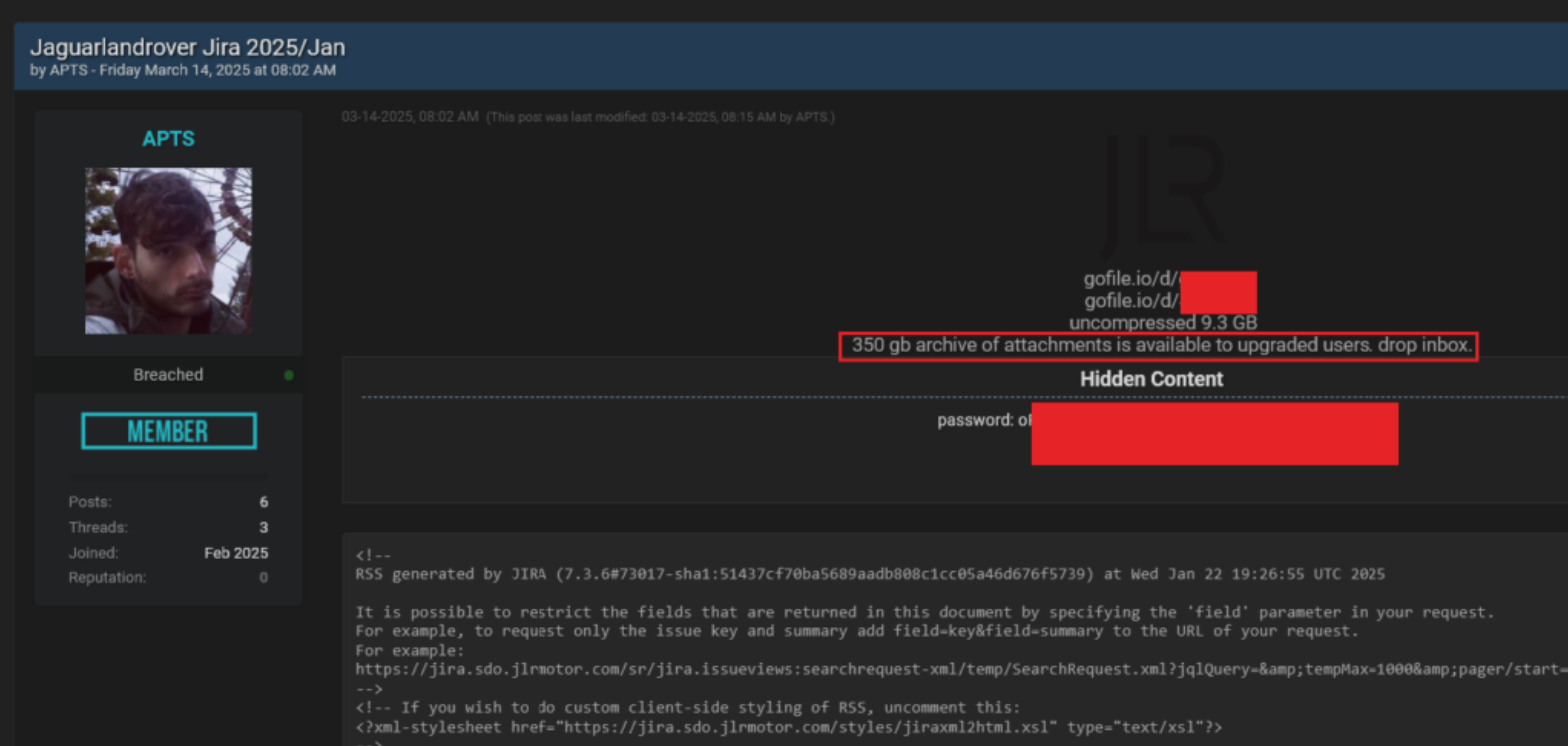

Notably, this was the second major cyber incident JLR faced in 2025. Earlier, in March, the Hellcat ransomware group claimed to have infiltrated JLR’s systems, leaking hundreds of gigabytes of internal data, including proprietary documents, source code, and employee records. That attack was reportedly enabled by stolen credentials, harvested by infostealer malware from a third party with access to JLR’s Jira system.

The exposed data in March even contained development logs and cloud service credentials, raising alarms about potential backdoors for future attacks. This earlier breach was a warning sign that JLR was already on the attackers’ radar and may have had lingering security gaps leading into the September attack.

The first signs of the September 2025 breach emerged quietly at the end of August. Managers at a JLR factory in Halewood, UK, suspected there “might have been a hack” as some systems began acting irregularly.

By September 1, JLR’s IT teams detected an intrusion in its network and took the drastic step of proactively shutting down systems to contain the damage. On September 2, the company publicly confirmed it had been “impacted by a cyber incident” and had paused its operations as a precaution.

What followed was an unprecedented production shutdown. JLR halted manufacturing at all its plants across the UK and in overseas locations (Slovakia, Brazil, India) for multiple weeks. Staff at several factories were instructed to stay home as assembly lines went silent.

The stoppage coincided with the UK’s “New Plate Day” sales period (when new vehicle registrations surge), compounding the financial losses for JLR and its dealers. Three weeks into the crisis, JLR was still largely incapacitated and unable to produce vehicles at any of its major plants.

The UK government soon acknowledged the “significant impact” of the attack on both JLR and the wider automotive supply chain. Industry estimates suggested JLR might be losing up to £50 million ($67 million) per week in revenue during the shutdown. Meanwhile, hundreds of supplier companies that rely on JLR’s orders were facing turmoil. Some smaller parts manufacturers began laying off workers or cutting pay, and unions warned that thousands of jobs in JLR’s supply chain were at risk if the outage continued.

On the adversary side, a hacker group calling itself “Scattered Lapsus$ Hunters” claimed responsibility for the breach. Almost immediately after JLR’s systems went down, this group boasted on Telegram that they had infiltrated JLR’s network and even shared screenshots of internal systems as proof.

One leaked image, for example, showed an internal Jaguar Land Rover domain (jlrint.com) and details of a backend issue in a JLR infotainment system, indicating deep access into the company’s internal IT environment.

Initially, JLR played down the possibility of data theft. In mid-September, the company stressed that there was “no evidence that customer data was stolen,” while acknowledging that “some data” had been impacted and that regulators were being notified as a precaution. As the investigation progressed, however, JLR had to revise its stance.

By late September, after hackers claimed to have stolen a cache of data, JLR confirmed that a data breach had indeed occurred, with some customer information compromised in the attack.

Jaguar Land Rover has not released a comprehensive technical post-mortem of the September 2025 breach; however, security researchers and leaked information from the attackers themselves provide a clear picture.

The JLR breach breakdown reveals that the intrusion was not the result of a sophisticated zero-day exploit, but rather the execution of well-known tactics: social engineering, credential abuse, weak segmentation, and inadequate detection.

At the heart of the attack was social engineering. The hacker collective responsible has a long history of using phishing and “vishing” (voice phishing) to impersonate insiders. Investigations suggest the breach originated from a targeted vishing campaign weeks earlier, when attackers posing as internal staff tricked employees into disclosing credentials.

Armed with a valid username and password, in some cases with administrator rights, the attackers could log in through normal authentication flows. This meant they didn’t need to brute-force systems or exploit unknown flaws; they simply walked in with stolen keys.

Credentials played a central role in both of JLR’s 2025 incidents. In the March attack, for instance, the Hellcat group compromised a third-party contractor’s Jira/VPN login credentials. That single account gave them access to JLR’s internal project management system, where they quietly extracted hundreds of gigabytes of data.

The September breach appeared to follow the same pattern: once the attackers had access to valid credentials, they encountered few safeguards to prevent them from proceeding.

Multi-factor authentication (MFA) was either missing or inconsistently applied, so a stolen password alone was often enough to log in.

Overly broad permissions meant a single account could access far more data than necessary.

Some credentials were shockingly outdated; reports showed that passwords stolen as far back as 2021 still worked in 2025.

Together, these factors made compromised credentials the “master key” that unlocked critical systems.

After breaching the perimeter, attackers expanded their access through lateral movement. They escalated privileges, navigated through JLR’s IT environment, and eventually reached core infrastructure. Reports indicate that once entrenched, they deployed ransomware or destructive malware, crippling servers and halting factory operations.

The fact that JLR felt forced to disconnect entire systems worldwide, from dealer platforms to factory lines, demonstrates how deeply attackers had penetrated. Instead of isolating a compromised segment, JLR had to hit the emergency brake across its global operations.

The scale of disruption also points to a lack of network segmentation. As a modern automaker, JLR had tightly integrated IT systems with factory automation and logistics. This “everything connected” model maximizes efficiency but leaves no firebreaks against cyberattacks.

Once attackers infiltrated corporate IT, they could potentially pivot toward operational systems, forcing JLR to shut down plants worldwide as a precaution. In a properly segmented environment, breaches in one area should not cascade into total production stoppages.
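To make the firebreak idea concrete, here is a minimal Python sketch of a default-deny policy between network zones. The zone names, ports, and rules are hypothetical illustrations, not JLR’s architecture; in practice such rules are enforced by firewalls or an IEC 62443-style zone-and-conduit design rather than application code. The key property is that corporate IT has no direct path to the plant floor, so a stolen IT credential cannot be used to pivot there.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    """A network connection attempt between two zones."""
    src_zone: str
    dst_zone: str
    port: int


# Hypothetical allowlist for illustration only. Anything not listed is denied.
ALLOWED_FLOWS = frozenset({
    Flow("corporate_it", "corporate_it", 443),
    Flow("corporate_it", "ot_dmz", 443),   # IT may only reach a brokered DMZ
    Flow("ot_dmz", "plant_floor", 4840),   # e.g. OPC UA from the DMZ to line systems
})


def is_allowed(flow: Flow) -> bool:
    """Default-deny: a flow passes only if it is explicitly allowlisted."""
    return flow in ALLOWED_FLOWS


def blocked(flows: list[Flow]) -> list[Flow]:
    """Return the attempted flows that the policy would drop."""
    return [f for f in flows if not is_allowed(f)]
```

With this policy, an attacker holding an IT account who tries `Flow("corporate_it", "plant_floor", 4840)` is dropped, because only the DMZ may talk to line systems.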

The attackers were able to exfiltrate massive volumes of data (in one case, an additional 350 GB dump) without immediate detection. This highlights a failure of monitoring and anomaly detection. Abnormal behaviors such as the following should have triggered alarms instantly:

A single user account retrieving hundreds of gigabytes of records

Connections tunneling data through Tor

Sudden traffic spikes from unusual IP ranges

Instead, logs were tampered with or deleted by the attackers, and the intrusion continued undetected until systems were already compromised. With real-time anomaly detection, JLR’s teams could have identified and contained the attack before it forced a global shutdown.
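The first warning sign in that list, one account moving far more data than usual, can be caught with a simple per-account baseline. The Python sketch below assumes you already collect daily outbound byte counts per account (for example from proxy or API logs); `flag_outbound_anomalies` is a hypothetical helper, and the z-score threshold and 50 GiB hard cap are illustrative defaults, not tuned values.

```python
from statistics import mean, pstdev

MIB = 1024 ** 2
GIB = 1024 ** 3


# Hypothetical helper; thresholds are illustrative and should be tuned per environment.
def flag_outbound_anomalies(
    history: dict[str, list[int]],
    today: dict[str, int],
    z_threshold: float = 4.0,
    hard_cap_bytes: int = 50 * GIB,
    min_history_days: int = 7,
) -> list[str]:
    """Return the accounts whose outbound volume today looks anomalous.

    history maps account -> outbound bytes per previous day.
    today maps account -> outbound bytes so far today.
    """
    flagged = []
    for account, sent in today.items():
        # Absolute ceiling: no single account should ever move this much data.
        if sent >= hard_cap_bytes:
            flagged.append(account)
            continue
        past = history.get(account, [])
        if len(past) < min_history_days:
            continue  # not enough history for a baseline; only the cap applies
        mu, sigma = mean(past), pstdev(past)
        # Floor the spread so a very steady account doesn't alert on tiny changes.
        spread = max(sigma, 0.1 * mu, 1.0)
        if (sent - mu) / spread >= z_threshold:
            flagged.append(account)
    return sorted(flagged)
```

The hard cap catches bulk dumps like the 350 GB exfiltration even for brand-new accounts, while the baseline catches smaller but unusual jumps for accounts with a history.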

The persistence of old and insecure accounts magnified the breach. The fact that an infostealer from 2021 could provide valid credentials in 2025 illustrates a failure in credential lifecycle management. Passwords were not being rotated, expired, or invalidated.

At the same time, multi-factor authentication was not consistently applied to sensitive applications, such as VPNs, Jira, or database consoles. Beyond this, researchers suggested attackers also scanned for unpatched vulnerabilities in JLR’s cloud and database platforms, which could have provided additional entry points. Weak credential management, coupled with slow patching, created multiple avenues for attackers to exploit.
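A credential-hygiene audit can surface the two gaps described above: leaked passwords that still work and sensitive apps reachable without MFA. The sketch below is a minimal illustration; the `Account` fields and the `SENSITIVE_APPS` set are hypothetical assumptions about what an identity system could export. Rather than flagging passwords purely by age, it keys on evidence of compromise, in line with NIST SP 800-63B, which recommends forcing a change when a credential is known to be compromised instead of on an arbitrary schedule.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical set of applications treated as sensitive (from the examples above).
SENSITIVE_APPS = {"vpn", "jira", "db_console"}


@dataclass
class Account:
    """Hypothetical account record exported from an identity system."""
    name: str
    password_changed: datetime
    last_login: datetime
    apps: set[str]
    mfa_enrolled: bool
    # When this account's credentials showed up in a leak or infostealer log, if ever.
    leaked_at: Optional[datetime] = None


def audit_account(
    acct: Account, now: datetime, max_idle: timedelta = timedelta(days=90)
) -> list[str]:
    """Return credential-hygiene findings for one account (empty list = clean)."""
    findings = []
    # If the password hasn't changed since the leak, the leaked password still works.
    if acct.leaked_at is not None and acct.password_changed <= acct.leaked_at:
        findings.append("leaked-password-still-valid")
    if now - acct.last_login > max_idle:
        findings.append("inactive")
    if acct.apps & SENSITIVE_APPS and not acct.mfa_enrolled:
        findings.append("no-mfa-on-sensitive-app")
    return findings
```

An account whose password was set in 2020, leaked by an infostealer in 2021, and still used for Jira without MFA in 2025 would return both `leaked-password-still-valid` and `no-mfa-on-sensitive-app`.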

Finally, JLR’s reliance on third-party contractors introduced another weak point. In March, a contractor’s Jira account became the initial foothold for attackers. This reflects a broader supply chain risk: external accounts often have weaker controls, are less closely monitored, and sometimes remain in place long after the contract ends.

Without strict governance, such as enforcing MFA for vendors, continuously auditing external access, and rapidly deprovisioning unused accounts, organizations leave open doors into their systems. For JLR, that door was pried open twice in the same year.
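Vendor access reviews are easy to automate once each external account is tied to a contract end date. The sketch below assumes a hypothetical `VendorAccount` record (in practice this data would be joined from an identity provider and procurement records) and splits active accounts into those that should be deprovisioned now and those that still need MFA enforced.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class VendorAccount:
    """Hypothetical record shape for an external (third-party) account."""
    username: str
    vendor: str
    contract_end: date
    mfa_enrolled: bool
    enabled: bool = True


def review_vendor_access(
    accounts: list[VendorAccount], today: date
) -> tuple[list[str], list[str]]:
    """Split active vendor accounts into (deprovision now, enforce MFA)."""
    deprovision, needs_mfa = [], []
    for acct in accounts:
        if not acct.enabled:
            continue  # already disabled; nothing to do
        if acct.contract_end < today:
            deprovision.append(acct.username)  # contract is over, so access should be too
        elif not acct.mfa_enrolled:
            needs_mfa.append(acct.username)
    return deprovision, needs_mfa
```

Running a review like this on a schedule closes the gap where an account "remains in place long after the contract ends."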

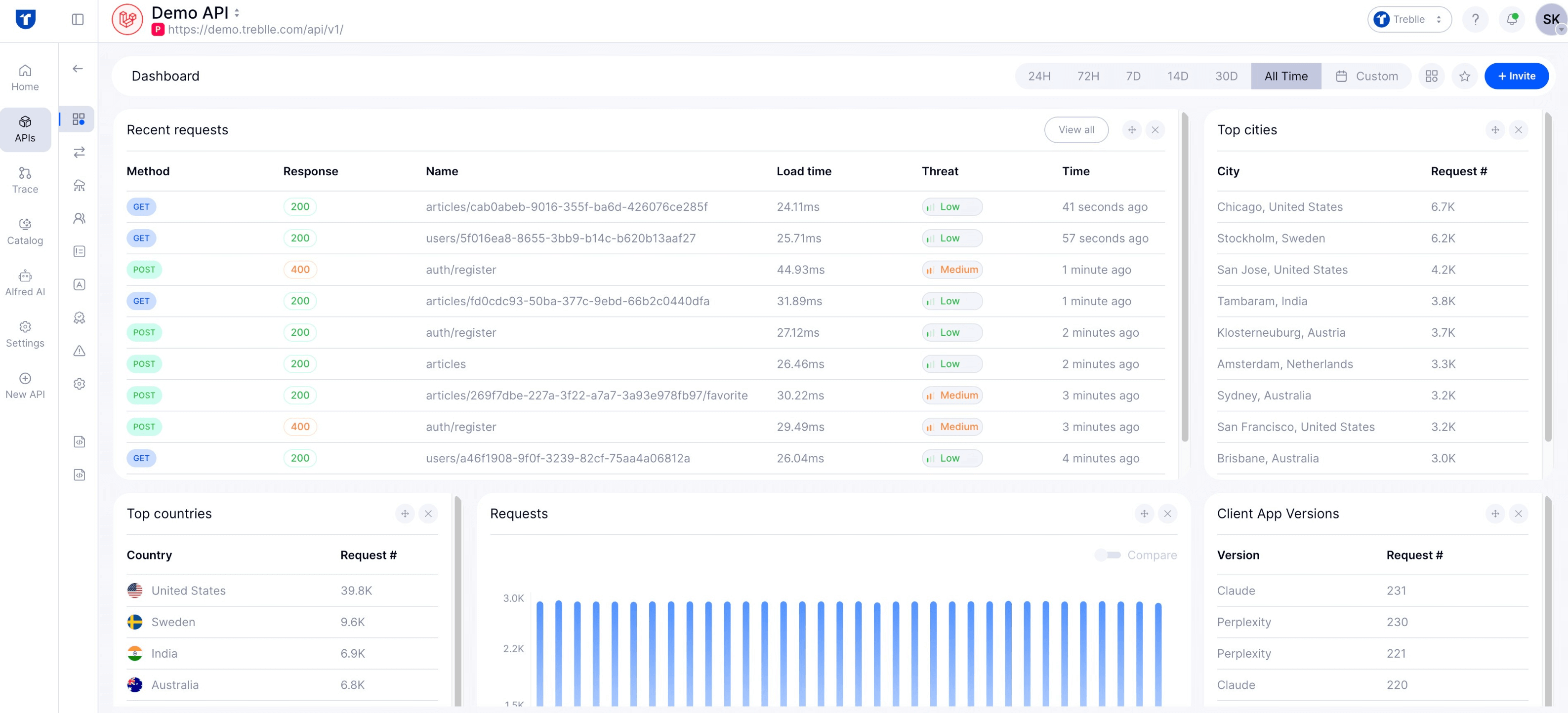

The JLR breach breakdown highlights systemic issues: stolen credentials went undetected, exfiltration of hundreds of gigabytes raised no alarms, and a lack of real-time visibility left the company blind until it was too late. Here’s how Treblle’s platform, across its core products, could have helped prevent or contain the incident:

Treblle’s API Intelligence provides a live map of every API in your environment, along with 50+ data points for each request.

At JLR, attackers were able to abuse internal applications and APIs without raising suspicion. With Treblle, unusual traffic, such as an account suddenly downloading massive data dumps, would have surfaced instantly in dashboards.

API Intelligence also automatically discovers “unknown” APIs. If JLR had undocumented or shadow APIs exposed internally or to suppliers (as the contractor VPN/Jira compromise suggests), Treblle would have flagged them before they were exploited.
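The core idea behind shadow-API discovery is a diff between the traffic you actually observe and the endpoints you have documented. The sketch below is a generic illustration of that idea, not Treblle’s implementation; the function names and example paths are hypothetical, and documented paths are assumed to use `{param}` placeholders as in OpenAPI.

```python
import re


# Hypothetical sketch of diffing observed traffic against a documented inventory.
def template_to_regex(template: str) -> re.Pattern:
    """Turn a documented path such as /api/v1/vehicles/{vin} into a regex."""
    # Split on {param} placeholders, keeping them so we can swap in a wildcard.
    parts = re.split(r"(\{[^/{}]+\})", template)
    pattern = "".join(
        "[^/]+" if p.startswith("{") and p.endswith("}") else re.escape(p)
        for p in parts
    )
    return re.compile(f"^{pattern}$")


def find_shadow_endpoints(
    observed: list[tuple[str, str]], documented: list[tuple[str, str]]
) -> list[tuple[str, str]]:
    """Return (method, path) pairs seen in traffic but absent from the spec."""
    specs = [(method.upper(), template_to_regex(t)) for method, t in documented]
    shadow = set()
    for method, path in observed:
        method, path = method.upper(), path.split("?", 1)[0]  # ignore query strings
        if not any(m == method and rx.match(path) for m, rx in specs):
            shadow.add((method, path))
    return sorted(shadow)
```

An internal endpoint such as `GET /internal/debug/export` that shows up in traffic but never in the spec is exactly the kind of forgotten door this check is meant to surface.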

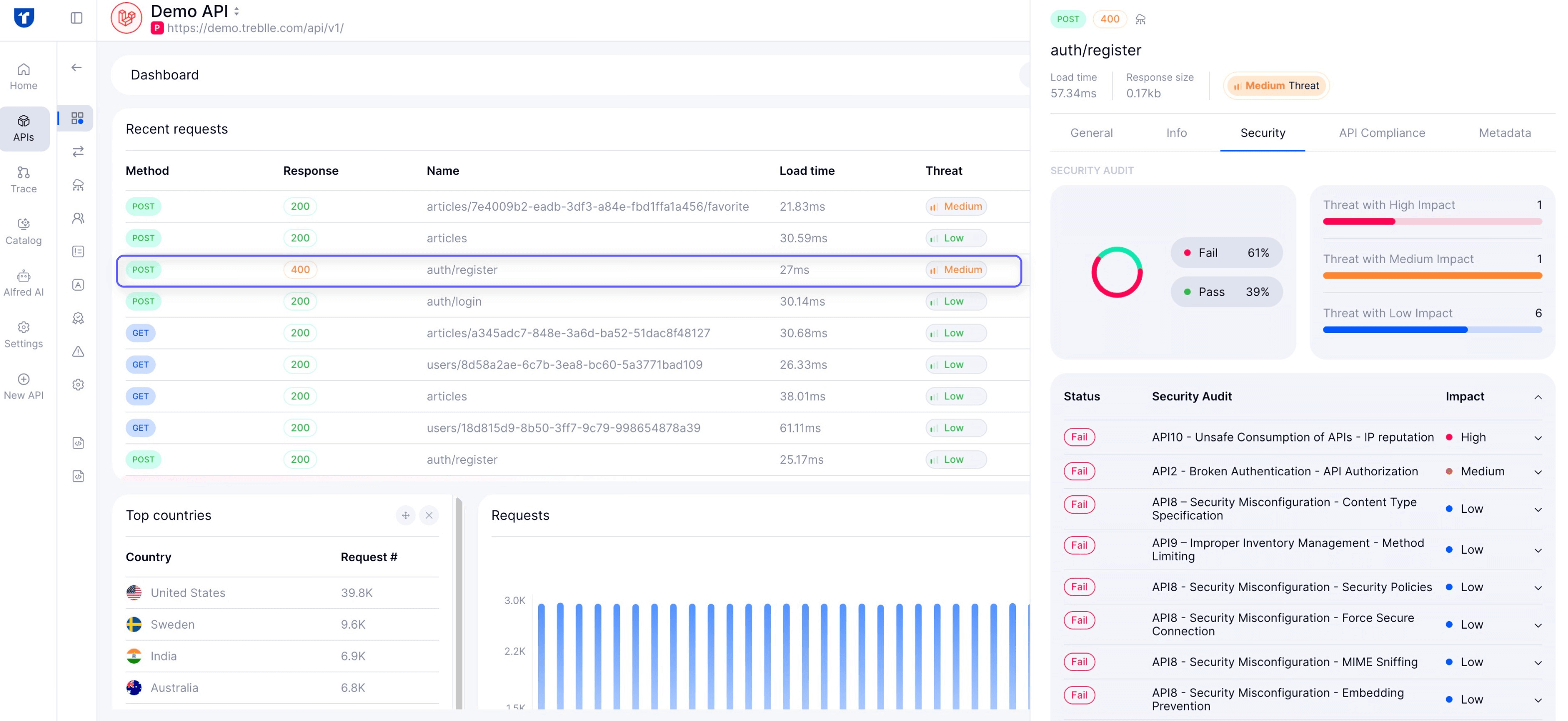

Treblle automatically detects sensitive data in payloads (PII, credentials, payment data) and flags violations of compliance frameworks like GDPR, PCI, and CCPA.

The Hellcat leak exposed JLR’s source code and employee records. With Treblle’s security layer masking sensitive responses, secrets such as API keys, tokens, or passwords would have been kept out of logs and responses, leaving less for attackers to harvest.
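Payload masking of this kind can be sketched as a recursive walk over a decoded JSON body. The Python below is a generic illustration of the technique, not Treblle’s code; the key-name and e-mail patterns are assumptions, and matching on key names deliberately errs toward over-masking (a field named `token_count` would also be masked).

```python
import re
from typing import Any

# Illustrative patterns only; real detectors cover many more data types.
SENSITIVE_KEY = re.compile(
    r"password|passwd|secret|token|api[_-]?key|authorization", re.IGNORECASE
)
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact(value: Any, mask: str = "***") -> Any:
    """Return a copy of a decoded JSON payload with sensitive data masked.

    Values under sensitive-looking keys are replaced wholesale; e-mail
    addresses are masked wherever they appear inside strings.
    """
    if isinstance(value, dict):
        return {
            k: mask if isinstance(k, str) and SENSITIVE_KEY.search(k) else redact(v, mask)
            for k, v in value.items()
        }
    if isinstance(value, list):
        return [redact(v, mask) for v in value]
    if isinstance(value, str):
        return EMAIL.sub(mask, value)
    return value  # numbers, booleans, None pass through unchanged
```

Because `redact` builds a new structure, the original payload can still be processed normally while only the masked copy is written to logs.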

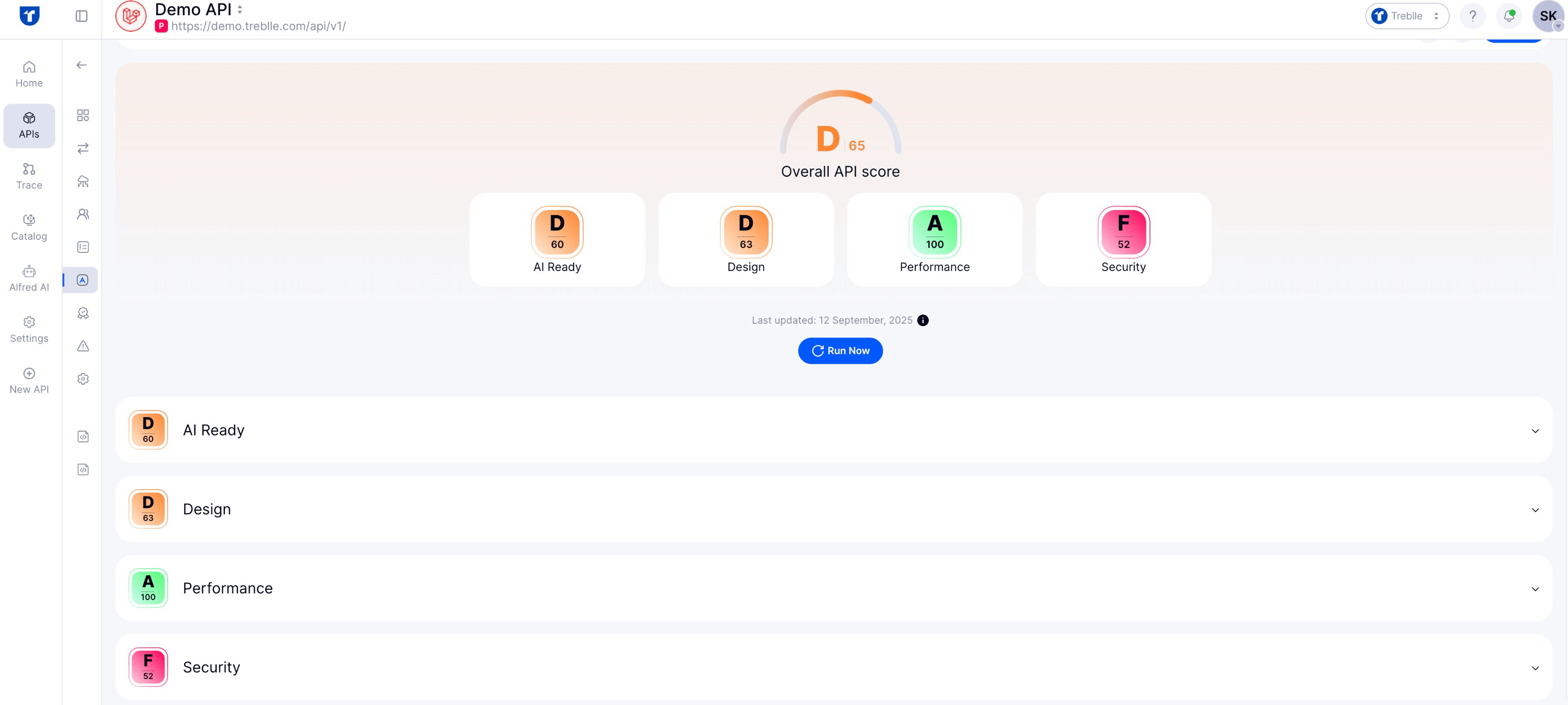

Treblle’s security scoring system grades every API call. If a low-scoring or non-compliant API had been in production at JLR, security teams would have identified it immediately and been able to remediate it before attackers leveraged it.
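To illustrate what per-call grading means, here is a toy scorer. The checks and weights are hypothetical and are not Treblle’s actual scoring model; the sketch only shows how a few observable properties of a request (transport, authentication, where secrets are placed) can be turned into a score plus a list of issues that a team can act on.

```python
from dataclasses import dataclass, field

# Hypothetical checks and weights; not Treblle's actual scoring model.
SECRET_PARAMS = {"password", "token", "api_key", "apikey", "secret"}


@dataclass
class ApiCall:
    """Minimal, hypothetical view of one API request."""
    scheme: str
    request_headers: dict[str, str] = field(default_factory=dict)
    query: dict[str, str] = field(default_factory=dict)


def score_call(call: ApiCall) -> tuple[int, list[str]]:
    """Grade one call from 0 to 100 and list the issues that cost points."""
    score, issues = 100, []
    if call.scheme.lower() != "https":
        score -= 40
        issues.append("plaintext-transport")
    if "authorization" not in {h.lower() for h in call.request_headers}:
        score -= 30
        issues.append("no-auth-header")
    if any(k.lower() in SECRET_PARAMS for k in call.query):
        score -= 20  # query strings tend to end up in proxy and server logs
        issues.append("secret-in-query-string")
    return max(score, 0), issues
```

Returning the issue list alongside the number matters more than the number itself: it tells the team what to fix.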

Treblle enforces design-time and runtime governance: validating contracts, schemas, and standards across teams.

JLR’s attackers exploited overly broad privileges and inconsistent access controls. With Treblle, governance policies (e.g., enforcing least-privilege API contracts, requiring schema validation, and restricting access to certain endpoints) would limit the damage that a single stolen credential could cause.
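Least-privilege API access can be expressed as a map from each endpoint to the single scope it requires, with anything unlisted denied by default. The endpoint paths and scope names below are hypothetical; in a real system they would come from the API contract and the token issuer. This sketch covers only the least-privilege part of governance, not schema validation.

```python
# Hypothetical endpoint-to-scope map; a real one would come from the API contract.
REQUIRED_SCOPE: dict[tuple[str, str], str] = {
    ("GET", "/api/v1/parts"): "parts:read",
    ("POST", "/api/v1/parts"): "parts:write",
    ("GET", "/api/v1/employees"): "hr:read",
}


def authorize(method: str, path: str, granted_scopes: set[str]) -> bool:
    """Allow a call only if the token carries the scope that endpoint needs."""
    required = REQUIRED_SCOPE.get((method.upper(), path))
    if required is None:
        return False  # endpoint not in the contract: deny by default
    return required in granted_scopes
```

A supplier token granted only `parts:read` can list parts but cannot write them, read employee data, or call any endpoint missing from the contract, which caps what a single stolen credential can reach.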

Governance also ensures that third-party integrations (like suppliers’ Jira/VPN accounts) follow the same security and documentation standards as internal APIs, reducing the risk of a forgotten vendor account becoming a backdoor.

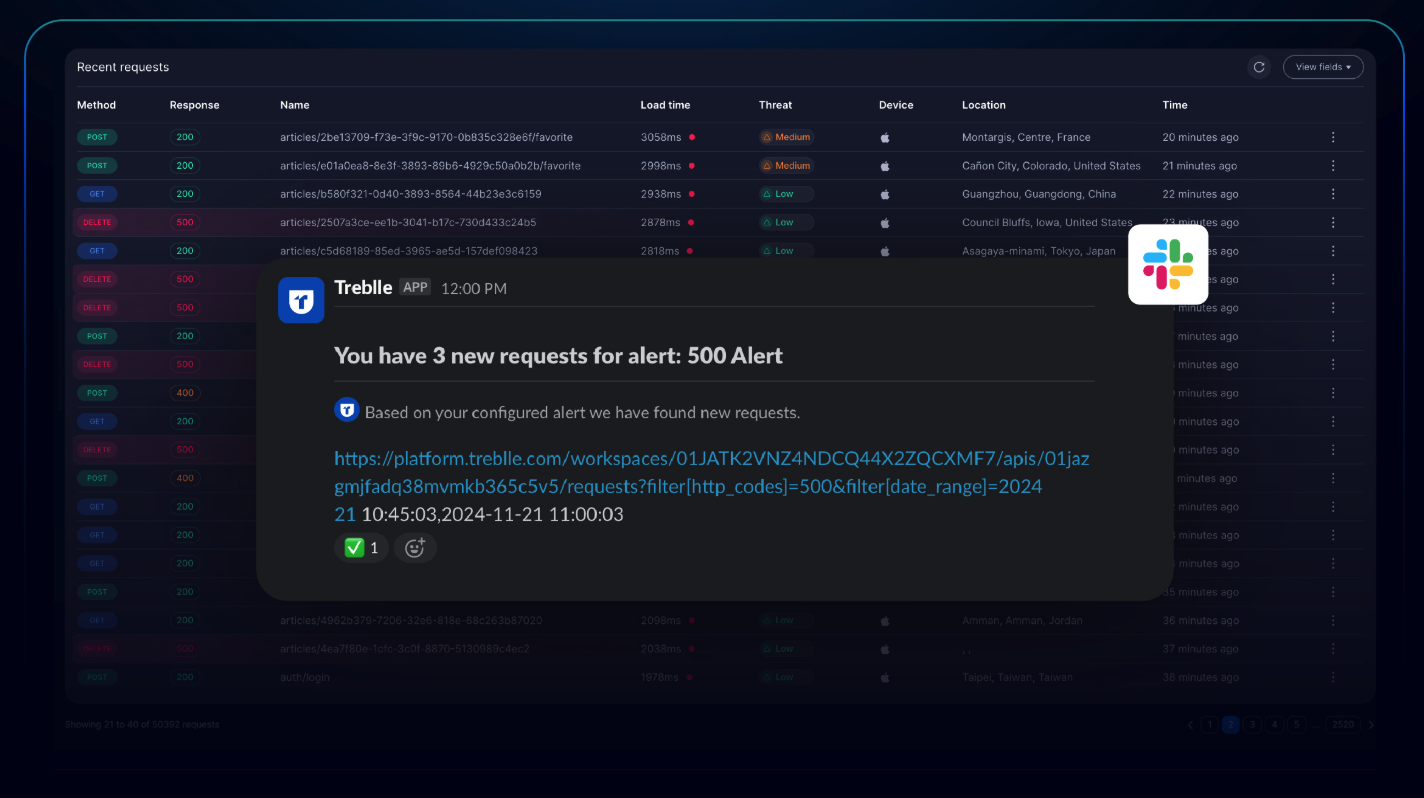

Real-time custom alerts are Treblle’s safety net. If JLR had configured Treblle alerts, its teams would have been paged the moment data exfiltration spiked or requests began originating from unusual IP ranges (e.g., Tor exit nodes).

Instead of discovering the intrusion only after the attackers had entrenched themselves, JLR’s SOC could have responded within minutes, potentially containing the attack before it forced production shutdowns and enabled data theft. Alerts can be routed to Slack, email, and other channels, so the right people are looped in automatically.
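The two alert conditions mentioned above can be sketched in a few lines of Python. This is not Treblle’s alerting API; it is a generic illustration in which the event shape, the rule set, and the threshold are assumptions. The Tor Project publishes the addresses of its exit nodes, so the sketch takes that list as an input and leaves fetching and refreshing it out of scope. Slack incoming webhooks accept a JSON body with a `text` field, which is all `post_to_slack` sends.

```python
import ipaddress
import json
import urllib.request


# Hypothetical rules; the event shape {"account", "ip", "bytes"} is an assumption.
def build_alerts(
    events: list[dict], tor_exit_ips: list[str], bytes_threshold: int
) -> list[str]:
    """Evaluate two example rules per request event and return alert messages."""
    exits = {ipaddress.ip_address(ip) for ip in tor_exit_ips}
    alerts = []
    for event in events:
        if ipaddress.ip_address(event["ip"]) in exits:
            alerts.append(f"{event['account']}: request from Tor exit node {event['ip']}")
        if event["bytes"] >= bytes_threshold:
            alerts.append(f"{event['account']}: {event['bytes']} bytes in one response")
    return alerts


def post_to_slack(webhook_url: str, text: str) -> int:
    """Send one message to a Slack incoming webhook and return the HTTP status."""
    body = json.dumps({"text": text}).encode("utf-8")
    request = urllib.request.Request(
        webhook_url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status
```

Keeping rule evaluation (`build_alerts`) separate from delivery (`post_to_slack`) makes the rules easy to test offline and lets the same alerts be routed to email or a pager instead.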

The 2025 Jaguar Land Rover breach underscores that even industry giants with substantial security investments can be brought to a standstill by a savvy cyberattack. For developers, CTOs, and security teams worldwide, this incident serves as a stark reminder of the importance of getting the fundamentals of cybersecurity right.

A combination of human error, inadequate internal controls, and delayed detection allowed a single intrusion to escalate into a crisis that impacted global operations.

Crucially, the JLR breach is not an isolated case. In recent years, we’ve seen similar patterns in other high-profile API and data breaches, from fintech services to other automakers, where a misconfigured API or a lack of proactive monitoring opened the door to attackers. These incidents underscore the risks associated with inadequate API governance and oversight.

Organizations must take a holistic approach to API security, treating their internal APIs and integrations with the same rigor as public-facing ones. Real-time observability, strict authentication, and compliance checks are no longer optional luxuries; they are essential safeguards for any modern enterprise handling sensitive data.

By learning from the JLR breach breakdown and implementing the preventative measures outlined above, companies can better protect themselves from being the next victim. Cyberattacks will continue to evolve, but a robust, well-monitored, and security-conscious infrastructure, combined with an alert and educated workforce, is the best defense against even the most determined adversaries.

In short, staying vigilant and proactive is key to ensuring that a breach of this magnitude “never stops the assembly line” at your organization.

Protect your APIs from threats with real-time security checks.

Treblle scans every request and alerts you to potential risks.

Explore Treblle
