AI | May 27, 2025 | 14 min read | By Savan Kharod

Savan Kharod works on demand generation and content at Treblle, where he focuses on SEO, content strategy, and developer-focused marketing. With a background in engineering and a passion for digital marketing, he combines technical understanding with skills in paid advertising, email marketing, and CRM workflows to drive audience growth and engagement. He actively participates in industry webinars and community sessions to stay current with marketing trends and best practices.

The Model Context Protocol (MCP) has emerged as a pivotal standard, enabling seamless integration between AI models and external tools or data sources. Selecting the best MCP servers is essential for developers aiming to build intelligent, context-aware AI applications.

This comprehensive guide goes beyond a mere list of options. It delves into the evaluation criteria essential for choosing the right MCP server, explores practical use cases, and offers deployment tips to help you make informed decisions.


Whether you're a developer, data engineer, or AI enthusiast, reading this guide on MCP servers will empower you to build more effective AI-ready applications.

Need real-time insight into how your APIs are used and performing?

Treblle helps you monitor, debug, and optimize every API request.

Explore Treblle


As AI applications become increasingly sophisticated, the Model Context Protocol (MCP) has emerged as a pivotal standard for integrating large language models (LLMs) with external tools and data sources.

Selecting the right MCP server is crucial for building intelligent, context-aware systems. Here are the key factors to consider:

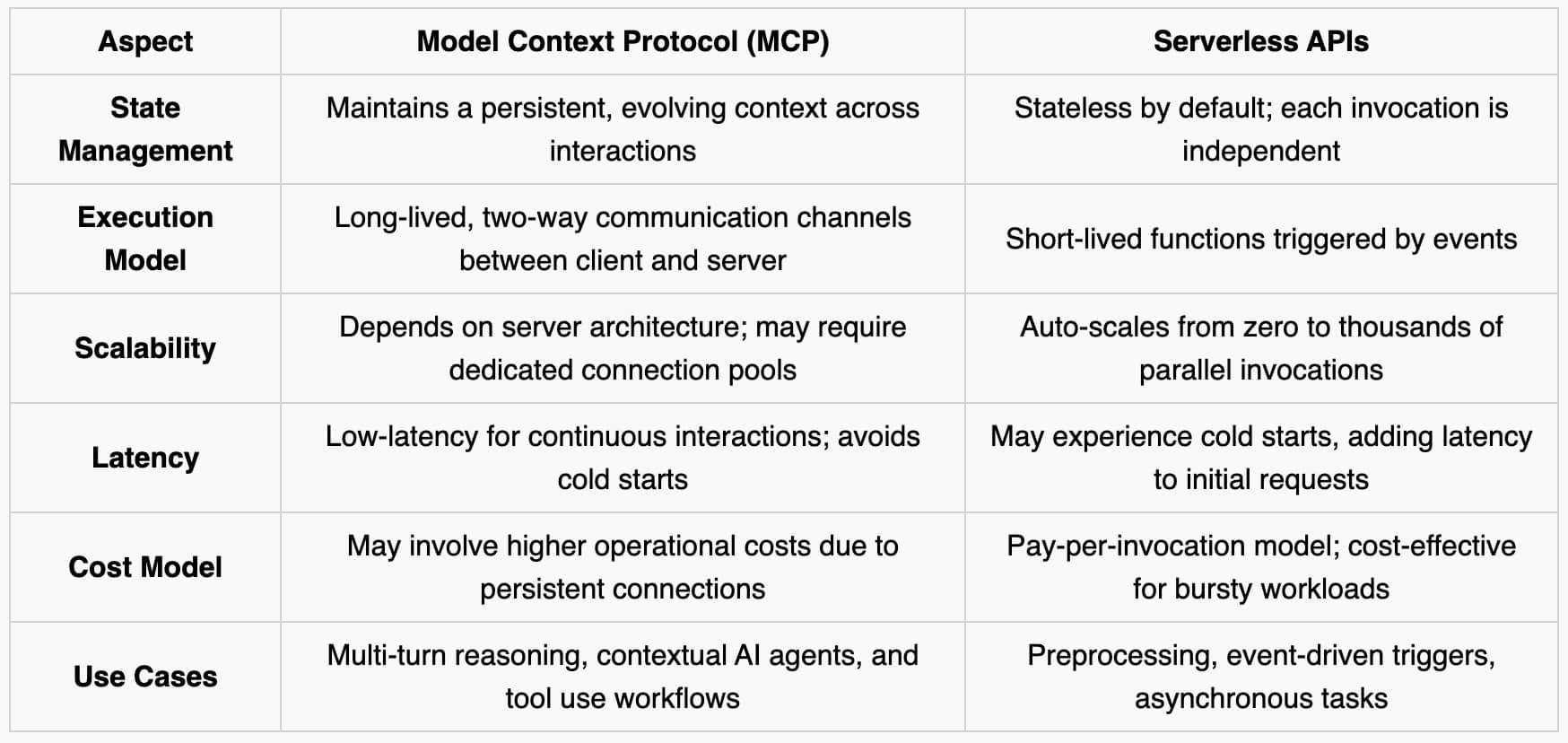

For example, the Vercel AI SDK provides `createMCPClient` for seamless tool retrieval and integration with various LLMs.

In AI application development, Model Context Protocol (MCP) and Serverless APIs offer distinct advantages. MCP provides structured, evolving context to AI models, while Serverless APIs offer event-driven, scalable execution. Rather than competing, the two architectures are often complementary, each addressing a different part of an AI application's needs.

Before we go further into the comparison, let’s establish the basics. For a broader perspective on how MCP stacks up against classic REST-based systems, check out this detailed comparison between MCP and traditional APIs.

Here is a short explanation of each term:

Now that you know what each term means, let’s dive further into their differences:

In practice, MCP and Serverless APIs can be integrated to leverage the strengths of both architectures:
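One way to picture this integration: the MCP layer publishes a tool's name and input schema and keeps context across calls, while each invocation is forwarded to a stateless, event-driven function. The sketch below is a stdlib-only illustration under that assumption, not tied to any cloud provider; `ContextualToolServer`, `lambda_style_handler`, and the event shape are hypothetical names, with the handler loosely mimicking the `handler(event, context)` signature used by AWS Lambda's Python runtime.

```python
import json
from typing import Any, Callable

Handler = Callable[[dict, Any], dict]


def lambda_style_handler(event: dict, context: Any = None) -> dict:
    """Hypothetical stateless function: converts Celsius to Fahrenheit.

    It keeps no state between invocations; everything it needs arrives in `event`.
    """
    body = json.loads(event["body"])
    fahrenheit = float(body["celsius"]) * 9 / 5 + 32
    return {"statusCode": 200, "body": json.dumps({"fahrenheit": fahrenheit})}


class ContextualToolServer:
    """Hypothetical MCP-style layer: owns tool schemas and session context,
    and delegates execution to stateless, event-driven handlers."""

    def __init__(self) -> None:
        self.tools: dict[str, tuple[dict, Handler]] = {}
        self.history: list[dict] = []  # context that survives across calls

    def register(self, name: str, schema: dict, handler: Handler) -> None:
        self.tools[name] = (schema, handler)

    def call(self, name: str, arguments: dict) -> dict:
        schema, handler = self.tools[name]
        missing = [key for key in schema.get("required", []) if key not in arguments]
        if missing:
            raise ValueError(f"missing required arguments: {missing}")
        # Wrap the arguments in an API-gateway-style event and invoke the function
        response = handler({"body": json.dumps(arguments)}, None)
        result = json.loads(response["body"])
        # The MCP side records the exchange; the function itself stays stateless
        self.history.append({"tool": name, "arguments": arguments, "result": result})
        return result


server = ContextualToolServer()
server.register(
    "to_fahrenheit",
    {"type": "object", "properties": {"celsius": {"type": "number"}}, "required": ["celsius"]},
    lambda_style_handler,
)
print(server.call("to_fahrenheit", {"celsius": 100}))  # {'fahrenheit': 212.0}
```

In a real deployment the handler would sit behind an HTTPS endpoint and the MCP layer would call it over the network; the in-process call here only keeps the sketch runnable.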

Want a side-by-side breakdown of when to use MCP and when to use Serverless APIs? This post explores their unique strengths and when to use each.

| MCP Server | Key Features | Best Use Cases | Deployment Tips |
|---|---|---|---|
| LangChain MCP | Seamless integration with LangChain agents; supports dynamic tool invocation; facilitates multi-turn conversations | Developing AI chatbots with memory; building AI-driven workflows that require context awareness | Use LangChain's documentation for setup; leverage community plugins to extend functionality |
| Vercel AI SDK MCP | Easy integration with Vercel's serverless infrastructure; supports real-time data fetching and context updates; built-in support for popular LLMs | Creating AI-powered web applications with dynamic content; adding context-aware features to existing applications | Deploy on Vercel's platform for optimal performance; follow the AI SDK documentation for best practices |
| AutoGPT MCP Backend | Lets autonomous AI agents interact with external tools and APIs; supports goal-oriented AI behavior; allows dynamic tool selection and usage | Developing autonomous AI agents for task automation; building AI systems that need adaptive tool usage | Secure API access with proper authentication mechanisms; monitor agent behavior to prevent unintended actions |
| Cloudflare Workers-Based MCP | Global distribution for reduced latency; scalable infrastructure for varying workloads; built-in security features for safe execution | Building AI applications that need real-time responses; deploying context-aware features closer to end users | Use Cloudflare KV storage for context persistence; use the Wrangler CLI for streamlined deployment |
| GitHub MCP Server | Access to repository metadata and content; can create, update, and comment on issues and PRs; supports authentication via GitHub tokens | Automating code review processes; building AI assistants for developer workflows | Grant GitHub tokens only the permission scopes they need; monitor API rate limits to prevent throttling |
| Google Calendar MCP Plugin | Create, update, and delete calendar events; access event details and attendee information; supports multiple calendars and time zones | Developing AI scheduling assistants; integrating calendar functionality into AI workflows | Set up OAuth 2.0 credentials for secure access; handle time zone conversions carefully |
| PrivateContext (Self-Hosted Secure MCP) | End-to-end encryption for data in transit and at rest; role-based access control for fine-grained permissions; audit logging for monitoring interactions | Handling confidential data in AI applications; complying with strict data protection regulations | Apply security patches regularly and monitor logs; use network segmentation for added protection |
| Node.js + OpenAPI-Based Custom MCP | Complete control over API endpoints and behavior; integration with existing Node.js ecosystems; scalability through modular architecture | Developing bespoke AI tools with unique requirements; integrating with legacy systems or specialized APIs | Use frameworks like Express.js for rapid development; document APIs thoroughly with OpenAPI specifications |
| Browser-Native MCPs for Client-Side Memory | Local storage of context data; reduced latency by eliminating server round-trips; better user privacy because data stays on-device | Developing AI applications for environments with limited connectivity; building privacy-focused AI tools | Ensure compatibility across browsers; implement data synchronization strategies for offline scenarios |
| HuggingGPT-Style Unified MCP Endpoints | Centralized access to diverse AI capabilities; simplified integration for complex workflows; scalable architecture for expanding toolsets | Building comprehensive AI platforms with varied functionality; supporting multi-modal AI applications | Design modular components for easier maintenance; implement robust error handling across integrated tools |

Now that you know the top MCP servers, you might also want to explore the best AI APIs available today to integrate with your stack and enhance your application’s capabilities.

In this section, we’ll learn how to build a custom MCP server from scratch, covering everything from choosing a framework and installing the MCP SDK to defining protocol endpoints, registering tools, adding middleware, securing your server, and deploying to production. By the end, you’ll have a boilerplate you can extend for any AI-powered application.

Depending on your stack and preferences, you can build an MCP server in Python or JavaScript/TypeScript.

If you use Gradio, you only need to pass `mcp_server=True` when launching your demo.

First, install the SDK that matches your chosen framework:

```bash
# Python MCP SDK
pip install mcp
```

The official Python MCP SDK (published on PyPI as `mcp`) lets you build servers that expose data and functionality to LLMs in a standardized way.

```bash
# TypeScript MCP SDK
npm install @modelcontextprotocol/sdk
```

The TypeScript SDK provides helper classes to define tools, capabilities, and request handlers for MCP servers.

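Strictly speaking, the MCP specification doesn't define REST routes: clients and servers exchange JSON-RPC 2.0 messages (for example, `tools/list` and `tools/call`) over stdio or HTTP transports. If you also want a plain HTTP interface for testing or for non-MCP clients, a simple wrapper can expose three routes:

- `/capabilities`: Lists available tools and their schemas.
- `/run`: Invokes a specified tool with inputs.
- `/health`: Simple health check for uptime monitoring.

Here’s a minimal FastAPI example: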

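```python
from fastapi import FastAPI, Request
from fastapi.encoders import jsonable_encoder
from mcp.server.fastmcp import FastMCP  # official Python SDK (pip install mcp)

app = FastAPI()
mcp = FastMCP("demo-server")


@app.get("/capabilities")
async def capabilities():
    # Registered tools with their names, descriptions, and JSON input schemas
    tools = await mcp.list_tools()
    return jsonable_encoder(tools)


@app.post("/run")
async def run(request: Request):
    # Expects a body like {"tool": "count_letters", "inputs": {"text": "hello"}}
    payload = await request.json()
    result = await mcp.call_tool(payload["tool"], payload["inputs"])
    return {"output": jsonable_encoder(result)}


@app.get("/health")
async def health():
    return {"status": "ok"}
```

The wrapper simply delegates to `FastMCP.list_tools()` and `FastMCP.call_tool()`. MCP-native clients bypass these routes and connect over one of the SDK's standard transports instead (for example, `mcp.run()` serves over stdio by default).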
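Under the hood, MCP frames tool discovery and invocation as two JSON-RPC 2.0 methods, `tools/list` and `tools/call`. The stdlib-only sketch below shows roughly how those methods map onto a tool registry. It is an illustration, not the SDK's implementation: it skips the `initialize` handshake, transports, and most error handling, and the `TOOLS` registry and `handle` function are hypothetical names.

```python
import json
from typing import Any, Callable

# Hypothetical in-memory registry: tool name -> (description, JSON Schema for inputs, function)
TOOLS: dict[str, tuple[str, dict, Callable[..., Any]]] = {
    "count_letters": (
        "Count letters in a string",
        {"type": "object", "properties": {"text": {"type": "string"}}, "required": ["text"]},
        lambda text: sum(ch.isalpha() for ch in text),
    ),
}


def handle(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request (tools/list or tools/call) with a JSON response string."""
    request = json.loads(raw)
    method, req_id = request.get("method"), request.get("id")

    if method == "tools/list":
        result = {
            "tools": [
                {"name": name, "description": desc, "inputSchema": schema}
                for name, (desc, schema, _fn) in TOOLS.items()
            ]
        }
    elif method == "tools/call":
        params = request.get("params", {})
        _desc, _schema, fn = TOOLS[params["name"]]
        value = fn(**params.get("arguments", {}))
        # Tool results are returned as a list of typed content items
        result = {"content": [{"type": "text", "text": str(value)}], "isError": False}
    else:
        # -32601 is the JSON-RPC 2.0 "Method not found" error code
        error = {"code": -32601, "message": f"Method not found: {method}"}
        return json.dumps({"jsonrpc": "2.0", "id": req_id, "error": error})

    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})


call = {
    "jsonrpc": "2.0",
    "id": 2,
    "method": "tools/call",
    "params": {"name": "count_letters", "arguments": {"text": "MCP rocks!"}},
}
print(handle(json.dumps(call)))
```

A production server would also handle `initialize`, validate arguments against each tool's `inputSchema`, and report tool failures with `"isError": true` rather than raising.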

Tools are simply functions you register with your MCP server:

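```python
# Continuing from the FastAPI example above
@mcp.tool(name="count_letters", description="Count letters in a string")
def count_letters(text: str) -> int:
    # Count alphabetic characters only; len(text) would also count spaces and punctuation
    return sum(ch.isalpha() for ch in text)
```

With the Python SDK, decorating a function registers it as an MCP tool that clients can invoke through `tools/call` (and, in the wrapper above, via `/run`).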
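Decorator-based registration works because the SDK can read a function's name, docstring, and type hints and turn them into the tool's JSON Schema; in the official SDK's `FastMCP`, the name and description default to the function name and docstring unless you pass them explicitly. The SDK does this with Pydantic; the stdlib sketch below only approximates the idea for a few primitive types, and `tool_definition` is a hypothetical helper.

```python
import inspect
from typing import get_type_hints

# Simplified mapping from Python annotations to JSON Schema types
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def tool_definition(fn) -> dict:
    """Build an MCP-style tool definition (name, description, inputSchema) from a function."""
    hints = get_type_hints(fn)
    properties, required = {}, []
    for name, param in inspect.signature(fn).parameters.items():
        # Unknown or missing annotations fall back to "string" in this sketch
        properties[name] = {"type": _JSON_TYPES.get(hints.get(name), "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # parameters without defaults are required
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


def repeat(text: str, times: int = 2) -> str:
    """Repeat a string a given number of times."""
    return text * times


print(tool_definition(repeat))
```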

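In Gradio, you can expose your interface directly (install the MCP extra first with `pip install "gradio[mcp]"`):

```python
import gradio as gr


def summarize(text: str) -> str:
    """Return a short summary of the input text."""
    # Placeholder logic: truncate long input; replace with a real summarizer
    return text if len(text) <= 100 else text[:100] + "..."


demo = gr.Interface(fn=summarize, inputs="text", outputs="text")
demo.launch(mcp_server=True)
```

Launching with `mcp_server=True` serves the web UI and an MCP server side by side. Gradio builds each tool's schema from the function's type hints and docstring and exposes the MCP endpoint under `/gradio_api/mcp/` (for example, `/gradio_api/mcp/sse` for SSE clients).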

Enhance your server with middleware for everyday concerns:

- Rate limiting: use `fastapi-limiter` or `koa-ratelimit` to protect against abuse.
- CORS and compression: add `CORSMiddleware` and `GZipMiddleware` for broader compatibility and performance.

Protect your MCP server with industry-standard controls:

- Restrict access to `/run` based on tokens; use frameworks like fastapi-security.

Choose a hosting platform that matches your latency, scalability, and cost requirements:

- … `/capabilities` and `/run` endpoints.

The Model Context Protocol (MCP) has rapidly transitioned from a novel concept to a foundational element in AI infrastructure. As we look ahead, several emerging trends are poised to shape its evolution, enhancing the capabilities and integration of AI systems across various domains.

As MCP solidifies its position as a universal interface, the volume of tool and model integrations will grow rapidly. Without clear standards and disciplined development practices, this can introduce API debt, complicating upgrades and increasing fragility across AI workflows. To understand how this kind of technical debt emerges—and how to avoid it—read more on why API debt is the new technical debt.

Emerging frameworks like ScaleMCP are introducing dynamic tool selection and auto-synchronization capabilities. These advancements allow AI agents to autonomously discover, integrate, and update tools during runtime, enhancing flexibility and reducing manual intervention.
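Dynamic tool selection can be pictured as a retrieval step: instead of handing the model every registered tool, the agent ranks tool descriptions against the current task and exposes only the best matches, so tools added to or removed from the registry are picked up on the next ranking. Real systems would more likely rank by embedding similarity; the sketch below swaps in plain word overlap so it runs with the standard library, and the `REGISTRY` contents and `select_tools` function are hypothetical.

```python
import re

# Hypothetical registry: tool name -> natural-language description
REGISTRY = {
    "create_issue": "Create a GitHub issue in a repository",
    "list_events": "List upcoming calendar events for a user",
    "send_email": "Send an email message to a recipient",
    "search_repo": "Search code in a GitHub repository",
}


def _words(text: str) -> set[str]:
    """Lowercased alphabetic tokens of a string."""
    return set(re.findall(r"[a-z]+", text.lower()))


def select_tools(task: str, registry: dict[str, str], k: int = 2) -> list[str]:
    """Return up to k tool names whose descriptions share the most words with the task."""
    task_words = _words(task)
    scored = [(len(task_words & _words(desc)), name) for name, desc in registry.items()]
    # Highest overlap first, ties broken alphabetically; tools with zero overlap are dropped
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for score, name in scored[:k] if score > 0]


print(select_tools("open an issue in the api repository on GitHub", REGISTRY))
```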

MCP is facilitating more sophisticated multi-agent systems by providing standardized context-sharing mechanisms. This enables coordinated interactions among specialized agents, improving efficiency in complex tasks such as collaborative research and enterprise knowledge management.

Innovations like the MCP-based Internet of Experts (IoX) are equipping AI models with domain-specific expertise. By integrating lightweight expert models through MCP, AI systems can access specialized knowledge without extensive retraining, enhancing performance in areas like wireless communications.

As MCP becomes more integral to AI operations, ensuring robust security and governance is paramount. Ongoing research is addressing potential vulnerabilities, such as prompt injection and unauthorized tool access, to establish best practices for secure MCP implementations. For more on how these risks are being tackled in real-world deployments, this article explores the evolving security model around MCP and AI systems.
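A common mitigation for unauthorized tool access is to check every tool call against an explicit per-role allowlist before it runs, so a prompt-injected request for a tool the caller shouldn't reach fails closed and leaves an audit trail. The role names, tool names, `POLICY`, and `authorize_call` below are hypothetical; a real deployment would derive roles from authenticated identities (for example, OAuth scopes) rather than a hard-coded dictionary.

```python
# Hypothetical role -> allowed tools policy
POLICY: dict[str, frozenset[str]] = {
    "viewer": frozenset({"list_events", "search_repo"}),
    "developer": frozenset({"list_events", "search_repo", "create_issue"}),
}


class ToolAccessDenied(PermissionError):
    """Raised when a caller requests a tool outside its allowlist."""


def authorize_call(role: str, tool: str, audit_log: list[str]) -> None:
    """Fail closed: unknown roles and unlisted tools are rejected; every decision is logged."""
    allowed = tool in POLICY.get(role, frozenset())
    audit_log.append(f"role={role} tool={tool} allowed={allowed}")
    if not allowed:
        raise ToolAccessDenied(f"role {role!r} may not call {tool!r}")


log: list[str] = []
authorize_call("developer", "create_issue", log)  # permitted
try:
    # e.g. a viewer session whose prompt was injected with "file an issue"
    authorize_call("viewer", "create_issue", log)
except ToolAccessDenied as exc:
    print(exc)
print(log)
```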

Beyond traditional tech sectors, MCP is making inroads into industries like finance, healthcare, and manufacturing. Its ability to standardize AI interactions with various tools and data sources is proving valuable for automating complex workflows and enhancing decision-making processes.

Efforts are underway to create comprehensive registries of MCP-compatible tools and services. These registries aim to streamline the discovery and integration of tools, fostering a more cohesive and efficient AI ecosystem.

As we conclude this comprehensive guide on Model Context Protocol (MCP) servers, it's evident that selecting the right MCP server is pivotal for developing AI-ready applications that are context-aware, scalable, and secure. Whether you opt for a managed solution or build your own, it's essential to consider factors like performance, extensibility, and integration capabilities.

In the dynamic landscape of AI application development, maintaining observability becomes crucial. Tools like Treblle offer real-time API monitoring, comprehensive logging, and insightful analytics to help developers quickly identify and resolve issues, ensuring smooth API operations.

By combining the right MCP server with robust observability practices, you're well-equipped to build and maintain AI applications that are both powerful and dependable.
